Abstract.

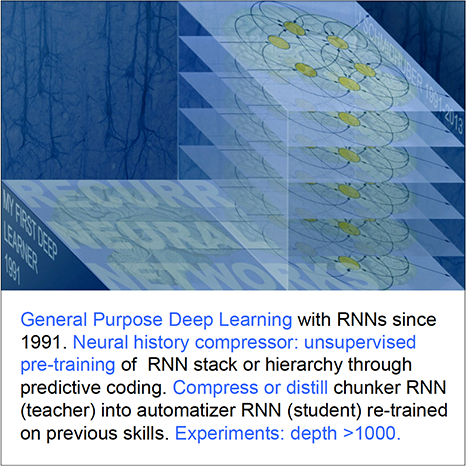

In 2021, we are celebrating the 30-year anniversary of my first very deep learning machine of 1991: the neural sequence chunker aka neural history compressor [UN0-UN2]. It uses unsupervised learning and predictive coding in a deep hierarchy of recurrent neural networks (RNNs) to find compact internal representations of long sequences of data. This greatly facilitates downstream supervised deep learning such as sequence classification. By 1993, the approach solved problems of depth 1000 (requiring 1000 subsequent computational stages or layers; the more such stages, the deeper the learning).

A variant collapses the hierarchy into a single deep net. It uses a so-called conscious chunker RNN which attends to unexpected events that surprise a lower-level so-called subconscious automatiser RNN. The chunker learns to understand the surprising events by predicting them. The automatiser uses my neural knowledge distillation procedure of 1991 [UN0-UN2] to compress and absorb the formerly conscious insights and behaviours of the chunker, thus making them subconscious. The systems of 1991 allowed for much deeper learning than previous methods.

Today's most powerful neural networks (NNs) tend to be very deep, that is, they have many layers of neurons or many subsequent computational stages. In the 1980s, however, gradient-based training did not work well for deep NNs, only for shallow ones [DL1-2].

This deep learning problem was perhaps most obvious for recurrent NNs (RNNs), informally proposed in 1943 [MC43], then formalised in 1956 [K56] (but don't forget prior related work in physics since the 1920s [L20] [I25] [K41] [W45]).

Like the human brain, but unlike the more limited feedforward NNs (FNNs), RNNs have feedback connections. This makes RNNs powerful, general-purpose, parallel-sequential computers that can process input sequences of arbitrary length (think of speech or videos). RNNs can in principle implement any program that can run on your laptop. Proving this is simple: since a few neurons can implement a NAND gate, a big network of neurons can implement a network of NAND gates. This is sufficient to emulate the microchip powering your laptop. Q.E.D.
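The NAND argument can be made concrete in a few lines. The following Python sketch is our own illustration, not code from [MC43] or [K56]. A single threshold neuron with hand-picked weights (-2, -2) and bias 3 computes NAND; these values are an assumption, and any weights that separate the four input cases would do. Four such neurons compute XOR, and feeding a hidden state back at every time step lets this fixed little circuit compute the parity of a bit sequence of any length. The network is wired by hand, not trained.

```python
def nand_neuron(a: int, b: int) -> int:
    """Threshold unit computing NAND: fires unless both inputs are 1."""
    # Hand-picked (assumed) weights -2, -2 and bias +3.
    return 1 if -2 * a - 2 * b + 3 > 0 else 0

def xor(a: int, b: int) -> int:
    """XOR built purely from four NAND neurons."""
    n1 = nand_neuron(a, b)
    return nand_neuron(nand_neuron(a, n1), nand_neuron(b, n1))

def recurrent_parity(bits) -> int:
    """A tiny hand-wired 'RNN': the hidden state h is fed back at every step,
    so the same fixed circuit handles input sequences of any length."""
    h = 0
    for x in bits:
        h = xor(h, x)  # feedback connection: new state depends on old state
    return h

print([nand_neuron(a, b) for a in (0, 1) for b in (0, 1)])  # [1, 1, 1, 0]
print(recurrent_parity([1, 0, 1, 1]))                       # 1
print(recurrent_parity([1] * 1000))                         # 0
```

A feedforward net of fixed depth could only handle parity up to some maximal input length; the feedback loop removes that limit.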

If we want to build an artificial general intelligence (AGI), then its underlying computational substrate must be something like an RNN; standard FNNs are fundamentally insufficient. In particular, unlike FNNs, RNNs can in principle deal with problems of arbitrary depth, that is, with data sequences of arbitrary length whose processing may require an a priori unknown number of subsequent computational steps [DL1]. Early RNNs of the 1980s, however, failed to learn very deep problems in practice (compare [DL1-2] [MOZ]). I wanted to overcome this drawback, to achieve RNN-based general-purpose deep learning.

In 1991, my first idea to solve the deep learning problem mentioned above was to facilitate supervised learning in deep RNNs through unsupervised pre-training of a hierarchical stack of RNNs. This led to the first very deep learner, called the Neural Sequence Chunker [UN0] or Neural History Compressor [UN1].

In this architecture, each higher-level RNN tries to reduce the description length (or negative log probability) of the data representations in the levels below. This is done using the Predictive Coding trick: while trying to predict the next input in the incoming data stream from the previous inputs, update neural activations only in the case of unpredictable data, so that only what is not yet known is stored.

In other words, given a training set of observation sequences, the chunker learns to compress typical data streams such that the deep learning problem becomes less severe and can be solved by gradient descent through standard backpropagation, an old technique from 1970 [BP1-4] [R7].
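The "description length (or negative log probability)" mentioned above can be made concrete. The number of bits needed to encode a sequence with a predictor is the sum of -log2 p(x_t | past) over all time steps. The Python sketch below assumes a hypothetical adaptive bigram model with add-one smoothing as a stand-in for a predictor RNN. It is not the method of [UN0-UN2]; it only shows that a predictable sequence costs far fewer bits than an irregular one of the same length.

```python
import math
import random
from collections import defaultdict

def description_length_bits(seq: str, alphabet: str) -> float:
    """Code length -sum_t log2 p(x_t | x_{t-1}) in bits under an adaptive
    bigram model with add-one (Laplace) smoothing. The model is a hypothetical
    stand-in for a predictor RNN; it learns online while coding."""
    counts = defaultdict(lambda: defaultdict(int))  # counts[prev][next]
    prev = None  # no context before the first symbol
    bits = 0.0
    for ch in seq:
        ctx = counts[prev]
        total = sum(ctx.values())
        p = (ctx[ch] + 1) / (total + len(alphabet))  # smoothed prediction
        bits -= math.log2(p)                          # cost of coding ch
        ctx[ch] += 1                                  # update the model
        prev = ch
    return bits

regular = "ab" * 50  # predictable after a few steps
rng = random.Random(0)
irregular = "".join(rng.choice("ab") for _ in range(100))  # no structure

print(round(description_length_bits(regular, "ab"), 2))  # 12.32
print(round(description_length_bits(irregular, "ab"), 2))
```

For the regular string, the first symbol costs 1 bit and the two transition contexts cost log2(51) and log2(50) bits in total, about 12.3 bits instead of the 100 bits a uniform code would need. What's predictable is compressible.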

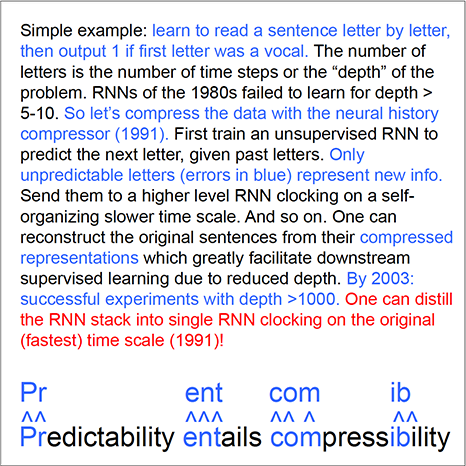

Let us consider an example. At the bottom of the text box, one can read the sentence "Predictability entails compressibility." That's what the lowest-level RNN observes, one letter at a time. It is trained in unsupervised fashion to predict the next letter, given the previous letters. The first letter is a "P". It was not correctly predicted. So it is sent (as raw data) to the next level, which sees only the unpredictable letters from the lower level. The second letter "r" is also unpredicted. But then, in this example, the lower RNN starts predicting well, because it was pre-trained on a set of typical sentences. The higher level updates its activations only when there is an unexpected error on the lower level. That is, the higher-level RNN is operating or "clocking" more slowly because it clocks only in response to unpredictable observations. What's predictable is compressible. Given the RNN stack and relative positional encodings of the time intervals between unexpected events [UN0-1], one can reconstruct the exact original sentence from its compressed representation. Finally, the reduced depth on the top level can greatly facilitate downstream learning, e.g., supervised sequence classification [UN0-2].
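The example above can be mimicked with a toy, non-neural Python sketch. All assumptions are ours: the pre-trained lower level is replaced by a hypothetical lookup table that predicts the most frequent successor of the previous character in a small corpus of "typical sentences", and the higher level is reduced to a list that merely stores each unpredicted character together with the time interval since the previous unexpected event. Because the lower-level predictor is fixed and shared with the decoder, these (interval, character) pairs plus the sequence length suffice to reconstruct the exact original sentence. Which letters count as surprises depends on this toy predictor, so they need not match the figure.

```python
from collections import Counter, defaultdict

def train_predictor(corpus):
    """Hypothetical stand-in for the pre-trained lower-level RNN: maps each
    character to its most frequent successor in the training corpus."""
    successors = defaultdict(Counter)
    for sentence in corpus:
        for prev, nxt in zip(sentence, sentence[1:]):
            successors[prev][nxt] += 1
    return {prev: c.most_common(1)[0][0] for prev, c in successors.items()}

def compress(text, predictor):
    """Pass only unpredicted characters up, each tagged with the time interval
    since the previous unexpected event (the higher level 'clocks' only then)."""
    events, last_t = [], 0
    for t, ch in enumerate(text):
        guess = predictor.get(text[t - 1]) if t > 0 else None
        if ch != guess:
            events.append((t - last_t, ch))
            last_t = t
    return events, len(text)

def decompress(events, length, predictor):
    """Rebuild the exact text: surprises come from the higher level, all other
    characters are regenerated by the same lower-level predictor."""
    surprise_at, t = {}, 0
    for interval, ch in events:
        t += interval  # relative intervals -> absolute times
        surprise_at[t] = ch
    out = []
    for t in range(length):
        out.append(surprise_at[t] if t in surprise_at else predictor[out[-1]])
    return "".join(out)

corpus = ["Predictability entails compressibility.",
          "What's predictable is compressible."]
predictor = train_predictor(corpus)
sentence = "Predictability entails compressibility."
events, length = compress(sentence, predictor)
print(f"{len(events)} of {length} characters reach the higher level")
print(decompress(events, length, predictor) == sentence)  # True
```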

To my knowledge, the Neural Sequence Chunker [UN0] was also the first system made of RNNs operating on different (self-organizing) time scales.

Although computers back then were about a million times slower per dollar than today, by 1993 the neural history compressor was able to solve previously unsolvable very deep learning tasks of depth > 1000 [UN2], i.e., tasks requiring more than 1000 subsequent computational stages (the more such stages, the deeper the learning).

I also had a way of compressing or distilling all those RNNs down into a single deep RNN operating on the original, fastest time scale. This method is described in Section 4 of the 1991 reference [UN0] on a "conscious" chunker and a "subconscious" automatiser, which introduced a general principle for transferring the knowledge of one NN to another (compare Sec. 2 of [MIR]).

To understand this method, first suppose a teacher NN has learned to predict (conditional expectations of) data, given other data. Its knowledge can be compressed into a student NN by training the student NN to imitate the behavior and internal representations of the teacher NN (while also re-training the student NN on previously learned skills such that it does not forget them). I called this collapsing or compressing the behavior of one net into another. Today, this is widely used, and also called distilling [HIN] [T20] [R4] or cloning the behavior of a teacher net into a student net.
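The teacher/student principle can be sketched with a deliberately tiny Python example. This is our own illustration under stated assumptions, not the 1991 chunker/automatiser setup. The "teacher" is a hypothetical fixed function standing in for a trained net, the student is a linear model trained by stochastic gradient descent, and only output behavior is imitated (matching internal representations is omitted). Forgetting is prevented by also training on targets produced by a frozen copy of the student's old weights on old-task inputs, one simple form of rehearsal. The teacher-task inputs deliberately reuse the feature that carries the student's old skill, so imitation alone would overwrite it.

```python
import random

def predict(w, x):
    """Linear student net: y = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))

def teacher(x):
    """Hypothetical trained teacher net (here simply a fixed function)."""
    return 2.0 * x[0] - 1.0 * x[1]

def distill(w_old, rehearse=True, steps=2000, lr=0.1, seed=0):
    """Train the student to imitate the teacher; optionally also rehearse the
    behavior of a frozen copy of the student's old weights on old-task inputs."""
    rng = random.Random(seed)
    frozen = list(w_old)
    w = list(w_old)
    for _ in range(steps):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        x_new = [a, b, a]                    # teacher-task inputs reuse feature 2
        batch = [(x_new, teacher(x_new))]    # distillation target: teacher output
        if rehearse:
            x_old = [0.0, 0.0, rng.uniform(-1, 1)]         # old-task input
            batch.append((x_old, predict(frozen, x_old)))  # old behavior target
        for x, target in batch:
            err = predict(w, x) - target
            # SGD step on the squared error 0.5 * err**2
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

old = [0.0, 0.0, 0.5]  # the student's old skill: y = 0.5 * x[2]
print([round(v, 3) for v in distill(old, rehearse=True)])   # [1.5, -1.0, 0.5]
print([round(v, 3) for v in distill(old, rehearse=False)])  # [0.75, -1.0, 1.25]
```

With rehearsal, the student reproduces the teacher on its inputs (1.5·x0 + 0.5·x2 = 2·x0 when x2 = x0) and keeps its old mapping y = 0.5·x2. Without rehearsal, imitation alone drags the shared weight to 1.25 and the old skill is lost.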

To summarize, one can compress or distill the RNN hierarchy down into the original RNN clocking on the fastest time scale. Then we get a single RNN which solves the entire deep, long-time-lag problem. To my knowledge, this was the first very deep learning compressed into a single NN.

In 1993, we also published a continuous Neural History Compressor without discrete time scales, where the time-varying "update strengths" of a higher-level RNN depend on the magnitudes of the surprises in the level below [UN3].
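A rough feel for surprise-dependent update strengths comes from the following Python sketch. It is our own hypothetical illustration, not the update rule of [UN3]. A naive lower-level predictor guesses that the next value equals the current one. The update strength is the absolute prediction error divided by an assumed `scale` parameter and clipped to [0, 1]. The higher-level state then moves toward the current input in proportion to that strength.

```python
def surprise_gated_updates(signal, scale=1.0):
    """Hypothetical sketch: the higher-level state h moves toward the current
    input with a strength that grows with the lower level's prediction error."""
    h = signal[0]
    prediction = signal[0]  # lower level: naive 'same as last step' predictor
    states, strengths = [], []
    for x in signal:
        surprise = abs(x - prediction)
        s = min(1.0, surprise / scale)  # update strength in [0, 1]
        h = (1.0 - s) * h + s * x       # continuous, surprise-weighted update
        prediction = x
        states.append(h)
        strengths.append(s)
    return states, strengths

signal = [0.0] * 5 + [0.3] * 5 + [2.0] * 5
states, strengths = surprise_gated_updates(signal)
print([round(h, 3) for h in states])
# [0.0, 0.0, 0.0, 0.0, 0.0, 0.09, 0.09, 0.09, 0.09, 0.09, 2.0, 2.0, 2.0, 2.0, 2.0]
```

The higher-level state stays put while the input is predictable, moves only 30% of the way on a small surprise, and jumps fully on a large one, instead of switching between "clock" and "no clock" as in the discrete chunker.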

Soon after the advent of the unsupervised pre-training-based very deep learner above, the fundamental deep learning problem (first analyzed in 1991 by my student Sepp Hochreiter [VAN1]; see Sec. 3 of [MIR] and item (2) of Sec. XVII of [T20]) was also overcome through purely supervised Long Short-Term Memory or LSTM (see Sec. 4 of [MIR] and Sec. A & B of [T20]). Subsequently, this new type of superior supervised learning made unsupervised pre-training less important, and LSTM drove much of the supervised deep learning revolution [DL4] [DEC].

Between 2006 and 2011, my lab also drove a very similar shift from unsupervised pre-training to pure supervised learning, this time for the simpler feedforward NNs (FNNs) [MLP1-2] [R4] rather than recurrent NNs (RNNs). This led to revolutionary applications to image recognition [DAN] and cancer detection, among many other problems. See Sec. 19 of [MIR].

Of course, deep learning in feedforward NNs started much earlier, with Ivakhnenko & Lapa, who published the first general, working learning algorithms for deep multilayer perceptrons with arbitrarily many layers back in 1965 [DEEP1]. For example, Ivakhnenko's paper from 1971 [DEEP2] already described a deep learning net with 8 layers, trained by a highly cited method still popular in the new millennium [DL2]. But unlike the deep FNNs of Ivakhnenko and his successors of the 1970s and 80s, our deep RNNs had general purpose parallel-sequential computational architectures [UN0-3]. By the early 1990s, most NN research was still limited to rather shallow nets with fewer than 10 subsequent computational stages, while our methods already enabled over 1000 such stages.

Finally, let me emphasize that the above-mentioned supervised deep learning revolutions of the early 1990s (for recurrent NNs) [MIR] and of 2010 (for feedforward NNs) [MLP1-2] did not kill unsupervised learning.

For example, pre-trained language models are now heavily used by feedforward Transformers, which excel at the traditional LSTM domain of Natural Language Processing [TR1] [TR2] (although there are still many language tasks that LSTM can rapidly learn to solve [LSTM13] while plain Transformers can't yet).

And our active & generative unsupervised NNs since 1990 [AC90-AC20] [PLAN] are still used to endow agents with artificial curiosity [MIR] (Sec. 5 & Sec. 6); see also a special case of our adversarial NNs [AC90b] called GANs [AC20] [R2] [PLAN] [T20] (Sec. XVII).

Unsupervised learning still has a bright future!

Acknowledgments

Thanks to several expert reviewers for useful comments. Since science is about self-correction, let me know under juergen@idsia.ch if you can spot any remaining error. The contents of this article may be used for educational and non-commercial purposes, including articles for Wikipedia and similar sites. This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

References

[UN0] J. Schmidhuber. Neural sequence chunkers. Technical Report FKI-148-91, Institut für Informatik, Technische Universität München, April 1991. Later published as [UN1].

[First working Deep Learner based on a deep RNN hierarchy (with different self-organising time scales), overcoming the vanishing gradient problem through unsupervised pre-training and predictive coding. Also: compressing or distilling a teacher net (the chunker) into a student net (the automatizer) that does not forget its old skills; such approaches are now widely used.]

[UN1] J. Schmidhuber. Learning complex, extended sequences using the principle of history compression. Neural Computation, 4(2):234-242, 1992. Based on TR FKI-148-91, TUM, 1991 [UN0].

[UN2] J. Schmidhuber. Habilitation thesis, TUM, 1993.

[Contains an ancient experiment on "Very Deep Learning" with credit assignment across 1200 time steps or virtual layers and unsupervised pre-training for a stack of recurrent NNs (depth > 1000).]

[UN3] J. Schmidhuber, M. C. Mozer, and D. Prelinger. Continuous history compression. In H. Hüning, S. Neuhauser, M. Raus, and W. Ritschel, editors, Proc. of Intl. Workshop on Neural Networks, RWTH Aachen, pages 87-95. Augustinus, 1993.

[SNT] J. Schmidhuber, S. Heil. Sequential neural text compression. IEEE Trans. Neural Networks, 1996.

[Neural predictive coding for auto-regressive language models. Earlier version at NIPS 1995.]

[CATCH] J. Schmidhuber. Philosophers & Futurists, Catch Up! Response to The Singularity. Journal of Consciousness Studies, Volume 19, Numbers 1-2, pp. 173-182(10), 2012.

[CON16] J. Carmichael (2016). Artificial Intelligence Gained Consciousness in 1991. Why A.I. pioneer Jürgen Schmidhuber is convinced the ultimate breakthrough already happened. Inverse, 2016.

[MLP1] D. C. Ciresan, U. Meier, L. M. Gambardella, J. Schmidhuber. Deep Big Simple Neural Nets For Handwritten Digit Recognition. Neural Computation 22(12): 3207-3220, 2010. ArXiv Preprint (1 March 2010).

[Showed that plain backprop for deep standard NNs is sufficient to break benchmark records, without any unsupervised pre-training.]

[MLP2] J. Schmidhuber (Sep 2020). 10-year anniversary of supervised deep learning breakthrough (2010). No unsupervised pre-training. The rest is history.

[DAN] J. Schmidhuber (Jan 2021). 2011: DanNet triggers deep CNN revolution.

[MIR] J. Schmidhuber (2019). Deep Learning: Our Miraculous Year 1990-1991. See also arxiv:2005.05744.

[DEC] J. Schmidhuber (2020). The 2010s: Our Decade of Deep Learning / Outlook on the 2020s.

[PLAN] J. Schmidhuber (2020). 30-year anniversary of planning & reinforcement learning with recurrent world models and artificial curiosity (1990).

[MOZ] M. Mozer. A Focused Backpropagation Algorithm for Temporal Pattern Recognition. Complex Systems, vol. 3(4), pp. 349-381, 1989.

[DL1] J. Schmidhuber, 2015. Deep Learning in neural networks: An overview. Neural Networks, 61, 85-117.

[DL2] J. Schmidhuber, 2015. Deep Learning. Scholarpedia, 10(11):32832.

[DL4] J. Schmidhuber, 2017. Our impact on the world's most valuable public companies: 1. Apple, 2. Alphabet (Google), 3. Microsoft, 4. Facebook, 5. Amazon ....

[HIN] J. Schmidhuber (2020). Critique of 2019 Honda Prize.

[T20] J. Schmidhuber (2020). Critique of 2018 Turing Award for deep learning.

[AC90] J. Schmidhuber. Making the world differentiable: On using fully recurrent self-supervised neural networks for dynamic reinforcement learning and planning in non-stationary environments. Technical Report FKI-126-90, TUM, Feb 1990, revised Nov 1990.

[This report introduced a whole bunch of concepts that are now widely used: planning with recurrent world models ([MIR], Sec. 11), high-dimensional reward signals as extra NN inputs / general value functions ([MIR], Sec. 13), deterministic policy gradients ([MIR], Sec. 14), and unsupervised NNs that are both generative and adversarial ([MIR], Sec. 5), for Artificial Curiosity and related concepts.]

[AC90b] J. Schmidhuber. A possibility for implementing curiosity and boredom in model-building neural controllers. In J. A. Meyer and S. W. Wilson, editors, Proc. of the International Conference on Simulation of Adaptive Behavior: From Animals to Animats, pages 222-227. MIT Press/Bradford Books, 1991. Based on [AC90].

[AC91b] J. Schmidhuber. Curious model-building control systems. Proc. International Joint Conference on Neural Networks, Singapore, volume 2, pages 1458-1463. IEEE, 1991.

[AC95] J. Storck, S. Hochreiter, and J. Schmidhuber. Reinforcement-driven information acquisition in non-deterministic environments. In Proc. ICANN'95, vol. 2, pages 159-164. EC2 & CIE, Paris, 1995.

[AC97] J. Schmidhuber. What's interesting? Technical Report IDSIA-35-97, IDSIA, July 1997.

[AC99] J. Schmidhuber. Artificial Curiosity Based on Discovering Novel Algorithmic Predictability Through Coevolution. In P. Angeline, Z. Michalewicz, M. Schoenauer, X. Yao, Z. Zalzala, eds., Congress on Evolutionary Computation, p. 1612-1618, IEEE Press, Piscataway, NJ, 1999.

[AC02] J. Schmidhuber. Exploring the Predictable. In Ghosh, S. Tsutsui, eds., Advances in Evolutionary Computing, p. 579-612, Springer, 2002.

[AC06] J. Schmidhuber. Developmental Robotics, Optimal Artificial Curiosity, Creativity, Music, and the Fine Arts. Connection Science, 18(2): 173-187, 2006.

[AC10] J. Schmidhuber. Formal Theory of Creativity, Fun, and Intrinsic Motivation (1990-2010). IEEE Transactions on Autonomous Mental Development, 2(3):230-247, 2010.

[AC11] Sun Yi, F. Gomez, J. Schmidhuber. Planning to Be Surprised: Optimal Bayesian Exploration in Dynamic Environments. In Proc. Fourth Conference on Artificial General Intelligence (AGI-11), Google, Mountain View, California, 2011.

[AC13] J. Schmidhuber. POWERPLAY: Training an Increasingly General Problem Solver by Continually Searching for the Simplest Still Unsolvable Problem. Frontiers in Cognitive Science, 2013. Preprint (2011): arXiv:1112.5309 [cs.AI].

[AC20] J. Schmidhuber. Generative Adversarial Networks are Special Cases of Artificial Curiosity (1990) and also Closely Related to Predictability Minimization (1991). Neural Networks, Volume 127, p 58-66, 2020. Preprint arXiv:1906.04493.

[R2] Reddit/ML, 2019. J. Schmidhuber really had GANs in 1990.

[VAN1] S. Hochreiter. Untersuchungen zu dynamischen neuronalen Netzen. Diploma thesis, TUM, 1991 (advisor J. Schmidhuber).

[First analysis of the problem of vanishing or exploding gradients in deep networks.]

[LSTM1] S. Hochreiter, J. Schmidhuber. Long Short-Term Memory. Neural Computation, 9(8):1735-1780, 1997.

[LSTM2] F. A. Gers, J. Schmidhuber, F. Cummins. Learning to Forget: Continual Prediction with LSTM. Neural Computation, 12(10):2451-2471, 2000.

[The "vanilla LSTM architecture" with forget gates that everybody is using today, e.g., in Google's TensorFlow.]

[LSTM13] F. A. Gers and J. Schmidhuber. LSTM Recurrent Networks Learn Simple Context Free and Context Sensitive Languages. IEEE Transactions on Neural Networks 12(6):1333-1340, 2001.

[TR1] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, I. Polosukhin (2017). Attention is all you need. NIPS 2017, pp. 5998-6008.

[TR2] J. Devlin, M. W. Chang, K. Lee, K. Toutanova (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. Preprint arXiv:1810.04805.

[DEEP1] Ivakhnenko, A. G. and Lapa, V. G. (1965). Cybernetic Predicting Devices. CCM Information Corporation. [First working Deep Learners with many layers, learning internal representations.]

[DEEP1a] Ivakhnenko, Alexey Grigorevich. The group method of data handling; a rival of the method of stochastic approximation. Soviet Automatic Control 13 (1968): 43-55.

[DEEP2] Ivakhnenko, A. G. (1971). Polynomial theory of complex systems. IEEE Transactions on Systems, Man and Cybernetics, (4):364-378.

[BPA] H. J. Kelley. Gradient Theory of Optimal Flight Paths. ARS Journal, Vol. 30, No. 10, pp. 947-954, 1960.

[BP1] S. Linnainmaa. The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors. Master's Thesis (in Finnish), Univ. Helsinki, 1970. See chapters 6-7 and FORTRAN code on pages 58-60. See also BIT 16, 146-160, 1976.

[The first publication on "modern" backpropagation, also known as the reverse mode of automatic differentiation.]

[BP2] P. J. Werbos. Applications of advances in nonlinear sensitivity analysis. In R. Drenick, F. Kozin, (eds): System Modeling and Optimization: Proc. IFIP, Springer, 1982.

[First application of backpropagation [BP1] to neural networks. Extending preliminary thoughts in his 1974 thesis.]

[BP4] J. Schmidhuber. Who invented backpropagation? More in [DL2].

[R7] Reddit/ML, 2019. J. Schmidhuber on Seppo Linnainmaa, inventor of backpropagation in 1970.

[R4] Reddit/ML, 2019. Five major deep learning papers by G. Hinton did not cite similar earlier work by J. Schmidhuber.

[MC43] W. S. McCulloch, W. Pitts. A Logical Calculus of Ideas Immanent in Nervous Activity. Bulletin of Mathematical Biophysics, Vol. 5, p. 115-133, 1943.

[K56] S.C. Kleene. Representation of Events in Nerve Nets and Finite Automata. Automata Studies, Editors: C.E. Shannon and J. McCarthy, Princeton University Press, p. 3-42, Princeton, N.J., 1956.

[L20] W. Lenz (1920). Beiträge zum Verständnis der magnetischen Eigenschaften in festen Körpern. Physikalische Zeitschrift, 21: 613-615.

[I25] E. Ising (1925). Beitrag zur Theorie des Ferromagnetismus. Z. Phys., 31 (1): 253-258, 1925.

[K41] H. A. Kramers and G. H. Wannier (1941). Statistics of the Two-Dimensional Ferromagnet. Phys. Rev. 60, 252 and 263, 1941.

[W45] G. H. Wannier (1945). The Statistical Problem in Cooperative Phenomena. Rev. Mod. Phys. 17, 50.