History of computer vision contests won by deep CNNs on GPU

Jürgen Schmidhuber (pronounce: you_again shmidhoobuh)

Since 2011, modern computer vision has relied on deep convolutional neural networks (CNNs) [4] efficiently implemented [18b] on massively parallel graphics processing units (GPUs). Table 1 below lists important international computer vision competitions (with official submission deadlines) won by deep GPU-CNNs, ordered by date, with a focus on those contests that brought "Deep Learning Firsts" and/or major improvements over the previous best or second best:

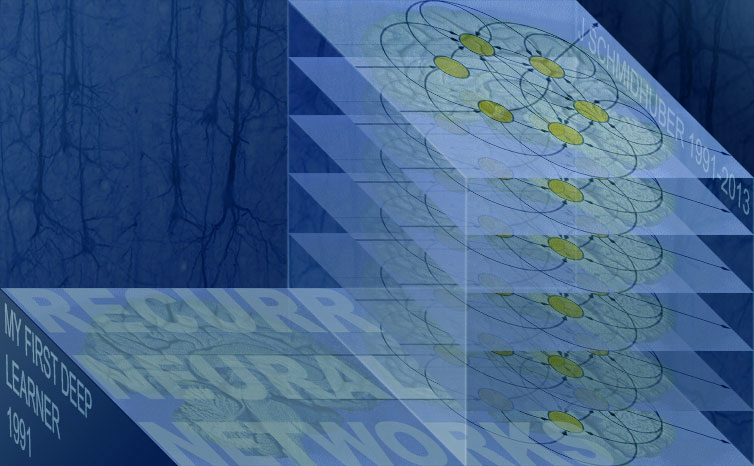

Background: GPUs were originally developed for the video game industry, but they can also be used to speed up artificial neural networks (NNs), as shown in 2004 [1]. Nevertheless, until 2010, many researchers thought that one cannot train deep NNs by plain backpropagation, the popular technique published in 1970 [5, 5a-c, 6]. Why not? Because of the fundamental deep learning problem identified in 1991 by my very first student Sepp Hochreiter [2c]: in typical deep or recurrent networks, back-propagated error signals either grow or decay exponentially in the number of layers. That's why many scientists thought that NNs have to be pre-trained by unsupervised learning, something that I did first for general purpose deep recurrent NNs in 1991 (my first very deep learner) [2,2a], and that others did for less general feedforward NNs in 2006 [2b] (in 2008 also on GPU [1b]). In 2010, however, our team at IDSIA (Dan Ciresan et al. [18]) showed that GPUs can be used to train deep standard supervised NNs by plain backpropagation [5], achieving a 50-fold speedup over CPUs and breaking the long-standing famous MNIST benchmark record [18] (using pattern distortions [15b]). This really was all about GPUs: no novel NN techniques were necessary, no unsupervised pre-training, only decades-old stuff. One of the reviewers called this a "wake-up call" to the machine learning community, which quickly adopted the method. In 2011, we extended [18b-d] this approach to convolutional NNs (CNNs) [4,1a], making GPU-CNNs 60 times faster [18b] than CPU-based CNNs. This became the basis for a whole series of victories in computer vision contests (see Table 1), which have attracted enormous interest from industry since 2011. Today, the world's most famous IT companies are heavily using this technique.
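
To illustrate the exponential growth or decay of back-propagated error signals mentioned above, here is a minimal sketch in Python. It assumes a toy chain of scalar sigmoid layers in which every layer has the same weight and the same pre-activation, so that each layer scales the error signal by the same factor |w * sigmoid'(z)|. The function names and parameters are illustrative choices made here and are not taken from [2c].

```python
import math


def sigmoid_derivative(z):
    """Derivative of the logistic sigmoid at pre-activation z (at most 0.25)."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def backprop_signal(depth, weight, pre_activation=0.0):
    """Magnitude of an error signal after passing back through `depth` layers.

    Toy assumption: every layer is a scalar sigmoid unit with the same
    weight and pre-activation, so the chain rule multiplies the signal
    by the same factor |w * sigmoid'(z)| once per layer.
    """
    per_layer_factor = abs(weight) * sigmoid_derivative(pre_activation)
    return per_layer_factor ** depth


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        decaying = backprop_signal(depth, weight=1.0)  # factor 0.25 per layer
        growing = backprop_signal(depth, weight=8.0)   # factor 2.0 per layer
        print(f"depth={depth:2d}  decaying={decaying:.3e}  growing={growing:.3e}")
```

In this simplified setting, a per-layer factor below 1 makes the signal shrink geometrically with depth, and a factor above 1 makes it blow up. Real networks have a different factor in every layer, but the product of many such factors tends to behave in a similar way.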

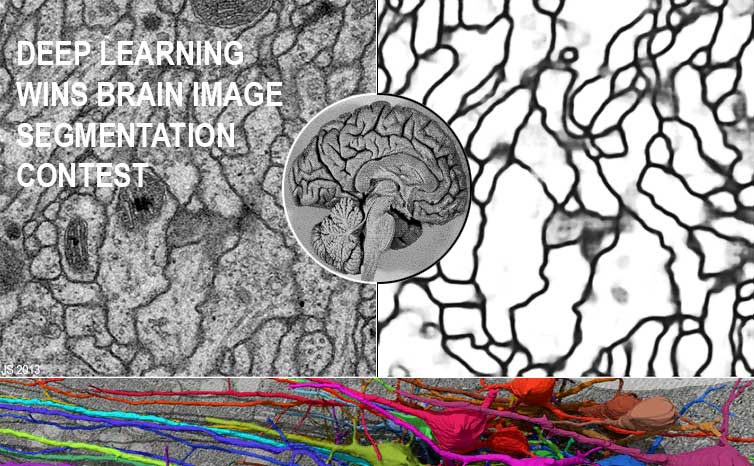

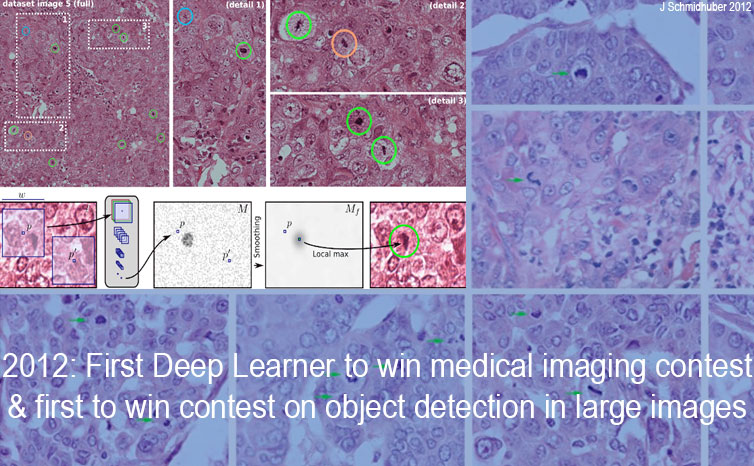

This attracted a lot of industry attention: it became clear that this was the way forward in computer vision. In particular, Apple hired one member of our award-winning team. (Some people think that Apple came late to the deep learning GPU-CNN party, but no, they got active as soon as this became commercially relevant.) Less than 3 months later, in August 2011 in Silicon Valley, our ensemble of GPU-CNNs achieved the first superhuman pattern recognition result in the history of computer vision [18c-d,19]. Our system was twice as good as humans, three times as good as the closest artificial competitor (from NYU), and six times as good as the best non-neural method. And then it kept winning those contests with larger and larger images, as shown in Table 1 (compare the Kurzweil AI interview of 2012). Table 1 also reflects that IDSIA's team had the first neural network to win an image segmentation contest (Mar 2012) [20d,20d+], the first NN to win a contest on object detection in large images (10 Sep 2012) [20a,c], the first to win medical imaging contests in general, and the first to win cancer detection contests in particular (Mitosis Detection in Breast Cancer Histological Images, 2012 & 2013) [20a-c]. Our fast CNN image scanners were over 1000 times faster than previous methods [20e]. Today, many startups as well as established companies such as IBM & Google are using such deep GPU-CNNs for healthcare applications (note that healthcare makes up 10% of the world's GDP). We did not participate in ImageNet competitions, focusing instead on challenging contests with larger images (ISBI 2012, ICPR 2012, MICCAI 2013, see Table 1). However, Univ. Toronto [19b] pointed out that their ImageNet 2012 winner (see Table 1) is similar to our IJCNN 2011 winner [19], and Microsoft's ImageNet 2015 winner [12] of Dec 2015 (Table 1) uses the principle of our "highway networks" [11] of May 2015, the first very deep feedforward networks with hundreds of layers, based on the LSTM principle [8].
(Table 1 does not list contests won through combinations of CNNs and other techniques such as Support Vector Machines and Bag of Features, e.g., the 2009 TRECVID competitions [21, 22]. It also does not include benchmark records broken outside of contests with concrete deadlines, e.g., [19].) We never needed any of the popular NN regularisers, which tend to improve error rates by at most a few percent, a gain that pales against the dramatic improvements brought by sheer GPU computing power. We used the GPUs of NVIDIA, which rebranded itself as a deep learning company during the period covered by the competitions in Table 1. BTW, thanks to NVIDIA and its CEO Jensen H. Huang (see image above) for our 2016 NN Pioneers of AI Award, and for generously funding our research! Most of the major IT companies such as Facebook are now using such deep GPU-CNNs for image recognition and a multitude of other applications [22]. ArcelorMittal, the world's largest steel maker, worked with us to greatly improve steel defect detection [3].
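
The highway principle cited above can be made concrete with a small sketch. According to [11], each non-input layer of a highway net computes g(x)x + t(x)h(x), where x is the data from the previous layer, and Resnets [12] are the special case g(x) = t(x) = 1. The Python below is only an illustration under stated assumptions: the function names, the elementwise tanh transform, the weight-free sigmoid gate, and the coupled carry gate g = 1 - t are choices made here for the example, not the authors' implementation.

```python
import math


def highway_layer(x, g, t, h):
    """Compute g(x) * x + t(x) * h(x) elementwise for a list of floats x.

    g, t and h are functions mapping a list of floats to a list of the
    same length (carry gate, transform gate, and transform).
    """
    gx, tx, hx = g(x), t(x), h(x)
    return [gi * xi + ti * hi for gi, xi, ti, hi in zip(gx, x, tx, hx)]


# Illustrative (hypothetical) choices for the three functions.
def tanh_transform(x):
    return [math.tanh(xi) for xi in x]


def sigmoid_gate(x, bias=-1.0):
    return [1.0 / (1.0 + math.exp(-(xi + bias))) for xi in x]


def coupled_carry_gate(x):
    # Assumption for this sketch: carry gate g = 1 - t.
    return [1.0 - ti for ti in sigmoid_gate(x)]


def ones(x):
    return [1.0] * len(x)


def residual_layer(x, h):
    """Special case g(x) = t(x) = 1, which gives x + h(x)."""
    return highway_layer(x, ones, ones, h)


if __name__ == "__main__":
    x = [0.5, -1.0, 2.0]
    print(highway_layer(x, coupled_carry_gate, sigmoid_gate, tanh_transform))
    print(residual_layer(x, tanh_transform))
```

When the transform gate is close to 0 and the carry gate close to 1, the layer passes x through almost unchanged. This is one way to see why such layers can be stacked very deeply.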

We are proud that our deep learning methods developed since 1991 have transformed machine learning and Artificial Intelligence (AI), and are now available to billions of users through the world's four most valuable public companies: Apple (#1 as of March 31, 2017), Google (Alphabet, #2), Microsoft (#3), and Amazon (#4).

References

[1] Oh, K.-S. and Jung, K. (2004). GPU implementation of neural networks. Pattern Recognition, 37(6):1311-1314. [Speeding up traditional NNs on GPU by a factor of 20.]

[1a] K. Chellapilla, S. Puri, P. Simard. High performance convolutional neural networks for document processing. International Workshop on Frontiers in Handwriting Recognition, 2006. [Speeding up shallow CNNs on GPU by a relatively small factor of 4.]

[1b] Raina, R., Madhavan, A., and Ng, A. (2009). Large-scale deep unsupervised learning using graphics processors. In Proceedings of the 26th Annual International Conference on Machine Learning (ICML), pages 873-880. ACM. Based on a NIPS 2008 workshop paper.

[2] Schmidhuber, J. (1992). Learning complex, extended sequences using the principle of history compression. Neural Computation, 4(2):234-242. Based on TR FKI-148-91, TUM, 1991.

[2a] J. Schmidhuber. Habilitation thesis, TUM, 1993. An ancient experiment with credit assignment across 1200 time steps or virtual layers and unsupervised pre-training for a stack of recurrent NNs can be found in this thesis.

[2b] G. E. Hinton, R. R. Salakhutdinov. Reducing the dimensionality of data with neural networks. Science, Vol. 313, no. 5786, pp. 504-507, 2006.

[2c] S. Hochreiter. Untersuchungen zu dynamischen neuronalen Netzen. Diploma thesis, TU Munich, in J. Schmidhuber's lab, 1991.

[3] J. Masci, U. Meier, D. Ciresan, G. Fricout, J. Schmidhuber. Steel Defect Classification with Max-Pooling Convolutional Neural Networks. Proc. IJCNN 2012.

[4] Fukushima's CNN architecture [13] (1979) (with Max-Pooling [14], 1993) is trained [6] in the shift-invariant 1D case [15a] or 2D case [15, 16, 17] by Linnainmaa's automatic differentiation or backpropagation algorithm of 1970 [5] (extending earlier work in control theory [5a-c]).

[5] Linnainmaa, S. (1970). The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors. Master's thesis, Univ. Helsinki. (See also BIT Numerical Mathematics, 16(2):146-160, 1976.)

[5a] Kelley, H. J. (1960). Gradient theory of optimal flight paths. ARS Journal, 30(10):947-954.

[5b] Bryson, A. E. (1961). A gradient method for optimizing multi-stage allocation processes. In Proc. Harvard Univ. Symposium on digital computers and their applications.

[5c] Dreyfus, S. E. (1962). The numerical solution of variational problems. Journal of Mathematical Analysis and Applications, 5(1):30-45.

[6] Werbos, P. J. (1982). Applications of advances in nonlinear sensitivity analysis. In Proceedings of the 10th IFIP Conference, 31.8 - 4.9, NYC, pp. 762-770. (Extending thoughts in his 1974 thesis.)

[8] Hochreiter, S. and Schmidhuber, J. (1997). Long Short-Term Memory. Neural Computation, 9(8):1735-1780. Based on TR FKI-207-95, TUM (1995).

[9] Gers, F. A., Schmidhuber, J., and Cummins, F. (2000). Learning to forget: Continual prediction with LSTM. Neural Computation, 12(10):2451-2471.

[10] Graves, A., Fernandez, S., Gomez, F. J., and Schmidhuber, J. (2006). Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural nets. Proc. ICML'06, pp. 369-376.

[10a] A. Graves, M. Liwicki, S. Fernandez, R. Bertolami, H. Bunke, J. Schmidhuber. A Novel Connectionist System for Improved Unconstrained Handwriting Recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 31, no. 5, 2009.

[11] Srivastava, R. K., Greff, K., Schmidhuber, J. Highway networks. Preprints arXiv:1505.00387 (May 2015) and arXiv:1507.06228 (Jul 2015). Also at NIPS'2015. The first working very deep feedforward nets with over 100 layers. Let g, t, h denote non-linear differentiable functions. Each non-input layer of a highway net computes g(x)x + t(x)h(x), where x is the data from the previous layer. (Like LSTM [8] with forget gates [9] for RNNs.) Resnets [12] are a special case of this where g(x)=t(x)=const=1.

[13] K. Fukushima. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biological Cybernetics, 36(4):193-202, 1980.

[14] Weng, J., Ahuja, N., and Huang, T. S. (1993). Learning recognition and segmentation of 3-D objects from 2-D images. Proc. 4th Intl. Conf. Computer Vision, Berlin, Germany, pp. 121-128.

[15] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. Hubbard, L. D. Jackel. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Computation, 1(4):541-551, 1989.

[15a] A. Waibel, T. Hanazawa, G. Hinton, K. Shikano, K. J. Lang. Phoneme Recognition using Time-Delay Neural Networks. ATR Tech report, 1987. (Also in IEEE TNN, 1989.)

[15b] Baird, H. (1990). Document image defect models. In Proc. IAPR Workshop on Syntactic and Structural Pattern Recognition, Murray Hill, NJ.

[16] M. A. Ranzato, Y. LeCun. A Sparse and Locally Shift Invariant Feature Extractor Applied to Document Images. Proc. ICDAR, 2007.

[17] D. Scherer, A. Mueller, S. Behnke. Evaluation of pooling operations in convolutional architectures for object recognition. In Proc. ICANN 2010.

[18] Ciresan, D. C., Meier, U., Gambardella, L. M., and Schmidhuber, J. (2010). Deep big simple neural nets for handwritten digit recognition. Neural Computation, 22(12):3207-3220.

[18b] D. C. Ciresan, U. Meier, J. Masci, L. M. Gambardella, J. Schmidhuber. Flexible, High Performance Convolutional Neural Networks for Image Classification. International Joint Conference on Artificial Intelligence (IJCAI-2011, Barcelona), 2011. [Speeding up deep CNNs on GPU by a factor of 60. Basis of all our computer vision contest winners since 2011.]

[18c] D. C. Ciresan, U. Meier, J. Masci, J. Schmidhuber. A Committee of Neural Networks for Traffic Sign Classification. International Joint Conference on Neural Networks (IJCNN-2011, San Francisco), 2011.

[18d] Results of 2011 IJCNN traffic sign recognition contest.

[18e] Results of 2011 ICDAR Chinese handwriting recognition competition.

[19] Ciresan, D. C., Meier, U., and Schmidhuber, J. (2012). Multi-column deep neural networks for image classification. Proc. CVPR, June 2012. Long preprint arXiv:1202.2745v1 [cs.CV], February 2012.

[19b] A. Krizhevsky, I. Sutskever, G. E. Hinton. ImageNet Classification with Deep Convolutional Neural Networks. NIPS 25, MIT Press, December 2012.

[20a] Results of 2012 ICPR cancer detection contest.

[20b] Results of 2013 MICCAI Grand Challenge (cancer detection).

[20c] D. C. Ciresan, A. Giusti, L. M. Gambardella, J. Schmidhuber. Mitosis Detection in Breast Cancer Histology Images using Deep Neural Networks. MICCAI 2013.

[20d] D. Ciresan, A. Giusti, L. Gambardella, J. Schmidhuber. Deep Neural Networks Segment Neuronal Membranes in Electron Microscopy Images. NIPS 2012, Lake Tahoe, 2012.

[20d+] I. Arganda-Carreras, S. C. Turaga, D. R. Berger, D. Ciresan, A. Giusti, L. M. Gambardella, J. Schmidhuber, D. Laptev, S. Dwivedi, J. M. Buhmann, T. Liu, M. Seyedhosseini, T. Tasdizen, L. Kamentsky, R. Burget, V. Uher, X. Tan, C. Sun, T. Pham, E. Bas, M. G. Uzunbas, A. Cardona, J. Schindelin, H. S. Seung. Crowdsourcing the creation of image segmentation algorithms for connectomics. Front. Neuroanatomy, November 2015.

[21] Ji, S., Xu, W., Yang, M., and Yu, K. (2013). 3D convolutional neural networks for human action recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(1):221-231.

[23] M. Stollenga, W. Byeon, M. Liwicki, J. Schmidhuber. Parallel Multi-Dimensional LSTM, with Application to Fast Biomedical Volumetric Image Segmentation. NIPS 2015; arxiv:1506.07452.
