
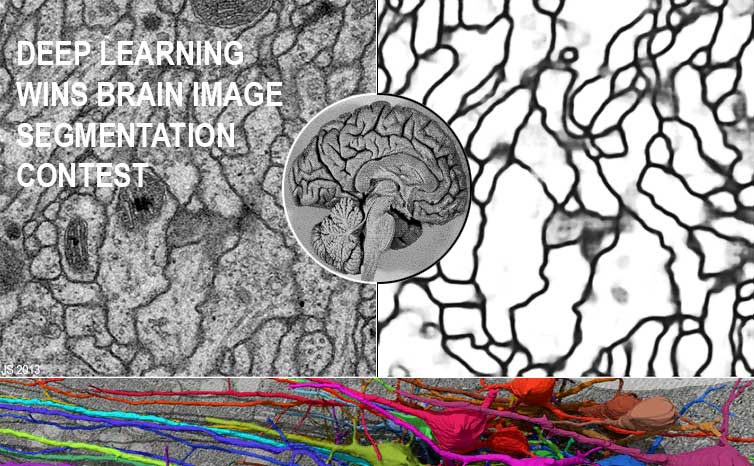

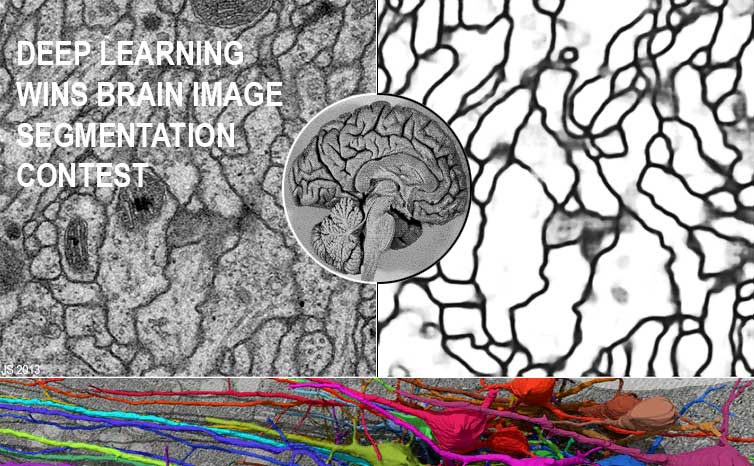

Deep Learning Wins 2012 Brain Image Segmentation Contest

(first Deep Learner to win a pure image segmentation competition)

Jürgen Schmidhuber

Our Deep Learning Neural Networks (deep NN) won the ISBI'12 Challenge on segmenting neuronal structures [2], through the work of Dan Claudiu Ciresan and Alessandro Giusti - see the recent NIPS paper [1].

To our knowledge, this was the first image segmentation competition (with secret test set) won by a feedforward Deep Learner.

(It should be mentioned, however, that our LSTM RNN already performed simultaneous segmentation and recognition when they became the first Deep Learners to win official international pattern recognition contests - in connected handwriting at ICDAR 2009 [13].)

This is relevant for the recently approved huge brain projects in Europe and the US. Given electron microscopy images of stacks of thin slices of animal brains, the goal is to build a detailed 3D model of the brain's neurons and dendrites. But human experts need many hours, days, or even weeks to annotate the images: Which parts depict neuronal membranes? Which parts are irrelevant background? This needs to be automated. Our deep NN learned to solve this task through experience with many training images. They won the contest on all three evaluation metrics by a large margin, with superhuman performance in terms of pixel error. (Ranks 2-6 went to researchers at ETHZ, MIT, CMU, and Harvard.)
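To make the pixel error criterion concrete, here is a minimal sketch in Python/NumPy. It assumes that pixel error is the fraction of pixels whose thresholded membrane probability disagrees with the expert annotation. The function name `pixel_error`, the 0.5 threshold, and the toy arrays are illustrative assumptions, not the official ISBI'12 evaluation code.

```python
import numpy as np

def pixel_error(prob_map, truth, threshold=0.5):
    """Fraction of pixels where the thresholded prediction differs from the annotation.

    prob_map:  array of predicted membrane probabilities in [0, 1]
    truth:     array of the same shape, 1 = membrane, 0 = background
    threshold: assumed cut-off for calling a pixel "membrane"
    """
    pred = np.asarray(prob_map) >= threshold
    truth = np.asarray(truth).astype(bool)
    if pred.shape != truth.shape:
        raise ValueError("prediction and annotation must have the same shape")
    return float(np.mean(pred != truth))

# Toy 2x2 example: one of four pixels is misclassified.
toy_prob = np.array([[0.9, 0.2],
                     [0.6, 0.1]])
toy_truth = np.array([[1, 0],
                      [0, 0]])
toy_error = pixel_error(toy_prob, toy_truth)
```

In this toy case only the lower-left pixel is predicted as membrane while annotated as background, so `toy_error` is 0.25.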

It is ironic that artificial NN (ANN) can help to better understand biological NN (BNN). And in more than one way - on my 2013 lecture tour I kept trying to convince neuroscientists that feature detectors invented by our deep visual ANN are highly predictive of what they will find in BNN once they have figured out how to measure synapse strengths. While the visual cortex of BNN may use a quite different learning algorithm, its objective function to be minimised must be similar to our ANN's.

We applied [6,6a] pure supervised gradient descent (40-year-old efficient reverse mode backpropagation, e.g., [3a,3]) to our deep and wide GPU-based multi-column max-pooling convolutional networks (MC GPU-MPCNN) [4,5] with alternating weight-sharing convolutional layers [8,6] and max-pooling layers [9,6a,10] of winner-take-all units [8] (over two decades, LeCun's lab has invented many improvements of such CNN). Plus a few additional tricks [1,4,5,11]. Our architecture is biologically rather plausible, inspired by early neuroscience-related work on so-called simple cells and complex cells [7,8].
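The alternation of convolutional and max-pooling layers, and the averaging over several columns, can be sketched roughly as follows in Python/NumPy. This is a toy forward pass under stated assumptions, not our GPU implementation: the layer sizes, the tanh nonlinearity, the sigmoid output, and all names (`conv2d_valid`, `max_pool`, `column_forward`, `multi_column_predict`) are hypothetical, the weights are random rather than trained, and training by backpropagation is omitted.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one shared kernel over a single-channel image (no padding)."""
    kh, kw = kernel.shape
    oh = image.shape[0] - kh + 1
    ow = image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(fmap, size=2):
    """Non-overlapping max-pooling: each size x size block keeps only its winner."""
    h = (fmap.shape[0] // size) * size
    w = (fmap.shape[1] // size) * size
    blocks = fmap[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

def column_forward(window, kernels, w_out):
    """One column: alternate convolution and max-pooling, then a sigmoid output unit."""
    x = window
    for k in kernels:
        x = max_pool(np.tanh(conv2d_valid(x, k)))
    logit = float(np.dot(x.ravel(), w_out))
    return 1.0 / (1.0 + np.exp(-logit))

def multi_column_predict(window, columns):
    """Multi-column output: average the predictions of independent columns."""
    return float(np.mean([column_forward(window, ks, w) for ks, w in columns]))

# Toy usage with random (untrained) weights; sizes are illustrative only.
rng = np.random.default_rng(0)
window = rng.random((16, 16))  # image patch centred on the pixel to classify
columns = [
    ([rng.normal(size=(3, 3)), rng.normal(size=(4, 4))], rng.normal(size=4))
    for _ in range(3)
]
p_membrane = multi_column_predict(window, columns)
```

With these toy sizes a 16x16 window shrinks to 14x14 after the first convolution, 7x7 after pooling, 4x4 after the second convolution, and 2x2 after the second pooling, which is why each column's output weight vector has four entries.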

Already in 2011, our MC GPU-MPCNN became the first artificial device to achieve human-competitive performance on major benchmarks [5,12,13]. Most if not all leading IT companies and research labs are now using (or trying to use) our combination of techniques, too.

When we started Deep Learning research over two decades ago [11,14], slow computers forced us to focus on toy applications. Today, our deep NN can already learn to rival human pattern recognisers in important domains [12,13]. And this is just the beginning - each decade we gain another factor of 100-1000 in terms of raw computational power per cent.

Credit for parts of the image: Daniel Berger, 2012

References

[1] D. Ciresan, A. Giusti, L. Gambardella, J. Schmidhuber. Deep Neural Networks Segment Neuronal Membranes in Electron Microscopy Images. In Advances in Neural Information Processing Systems (NIPS 2012), Lake Tahoe, 2012.

[2] Segmentation of neuronal structures in EM stacks challenge - ISBI 2012

[3] Paul J. Werbos. Applications of advances in nonlinear sensitivity analysis. In R. Drenick, F. Kozin, (eds): System Modeling and Optimization: Proc. IFIP (1981), Springer, 1982.

[3a] S. Linnainmaa. The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors. Master's Thesis (in Finnish), Univ. Helsinki, 1970. See chapters 6-7 and FORTRAN code on pages 58-60.

[4] D. C. Ciresan, U. Meier, J. Masci, L. M. Gambardella, J. Schmidhuber. Flexible, High Performance Convolutional Neural Networks for Image Classification. International Joint Conference on Artificial Intelligence (IJCAI-2011, Barcelona), 2011.

[5] D. C. Ciresan, U. Meier, J. Schmidhuber. Multi-column Deep Neural Networks for Image Classification. Proc. IEEE Conf. on Computer Vision and Pattern Recognition CVPR 2012, p 3642-3649, 2012. Longer TR: arXiv:1202.2745v1 [cs.CV]

[6] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. Hubbard, L. D. Jackel. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Computation, 1(4):541-551, 1989.

[6a] M. Ranzato, F.J. Huang, Y. Boureau, Y. LeCun. Unsupervised Learning of Invariant Feature Hierarchies with Applications to Object Recognition. Proc. CVPR 2007, Minneapolis, 2007.

[7] D. H. Hubel, T. N. Wiesel. Receptive Fields, Binocular Interaction and Functional Architecture in the Cat's Visual Cortex. Journal of Physiology, 1962.

[8] K. Fukushima. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biological Cybernetics, 36(4): 193-202, 1980.


[9] M. Riesenhuber, T. Poggio. Hierarchical models of object recognition in cortex. Nature Neuroscience, 2(11):1019-1025, 1999.

[10] D. Scherer, A. Mueller, S. Behnke. Evaluation of pooling operations in convolutional architectures for object recognition. In Proc. ICANN 2010.

[11] J. Schmidhuber. My first Deep Learner of 1991 + Deep Learning timeline 1962-2013

[12] J. Schmidhuber. 2011: First Superhuman Visual Pattern Recognition

[13] J. Schmidhuber. 2009: First official international pattern recognition contests won by Deep Learning (3 connected handwriting competitions at ICDAR 2009 won through LSTM RNN performing simultaneous segmentation and recognition); 2011: first human-competitive recognition of handwritten digits

[14] J. Schmidhuber. Deep Learning since 1991.
