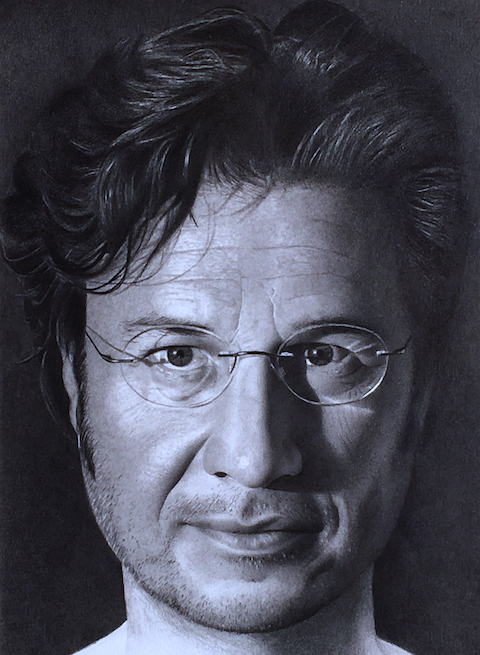

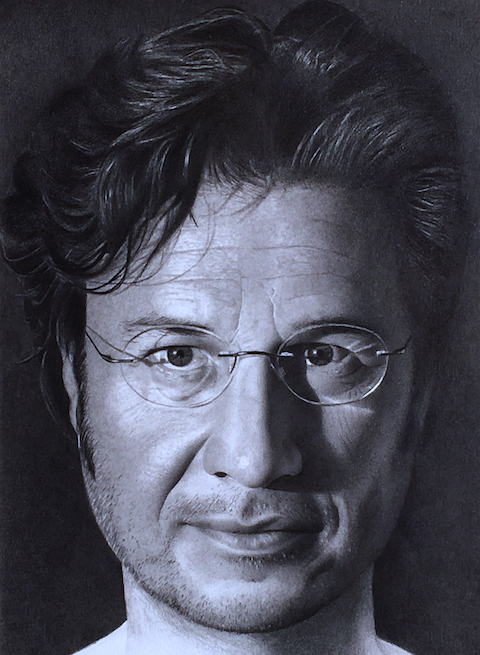

Marco Zaffalon

(Drawn in pencil; see the author.)

Scientific Director

Istituto "Dalle Molle" di Studi sull'Intelligenza Artificiale (IDSIA)

Polo universitario Lugano

Via la Santa 1

CH-6962 Lugano, Switzerland

Tel.: +41 (0)58 666 666 5

Fax: +41 (0)58 666 666 1

E-mail:

.

Dblp: 08/4633.

Orcid: 0000-0001-8908-1502.

Scholar: ECEcK4cAAAAJ.

Scopus: 6602149061.

Semanticscholar: 1825163.

Last update: February 29, 2024.

Science. There are three stages in scientific discovery: first, people deny that it is true, then they deny that it is important; finally they credit the wrong person. (Alexander von Humboldt)

Art. This fellow has a problem ... he considers boredom an Art. (Charles Bukowski)

Ethics. An ethicist is someone who sees something wrong with whatever you have in mind. (Marvin Minsky)

Love. A love relation's stages: don't ever change; you should change; you have changed. (Anonymous)

Naive realism. Have you ever noticed that anybody driving slower than you is an idiot, and anyone going faster than you is a maniac? (George Carlin)

Religion. Thanks to god for making me an atheist. (Ricky Gervais)

ChatGPT. The "I" in LLM stands for intelligence. (Daniel Stenberg)

Hanlon's razor. Don't ascribe to malice what can be plainly explained with incompetence. (Robert J. Hanlon)

My point of view on the state of Artificial Intelligence in Ticino (in Italian) as of July 8, 2020. Debating on similar issues with some nice guests (still in Italian) on October 15, 2021.

Biographical note

- M.Sc. degree cum laude in Computer Science in 1991 and Ph.D. degree in Applied Mathematics in 1997, both from Università degli Studi di Milano, Italy.

- At IDSIA since 1997. Founder and head of the Imprecise Probability Group.

- Co-chair of the conferences ISIPTA '03, ISIPTA '07, and chair of the 1st SIPTA School on Imprecise Probabilities.

- Formerly: founding Member, Secretary, and President of SIPTA.

- Senior Area Editor for imprecise probabilities at IJAR since 2018 (Area Editor during 2005–2017).

- SUPSI Professor since 2009.

- Lecturer: Causal Inference, 2018–2020 [book: Primer; slides: all (revised May 2023)]; Uncertain Reasoning and Data Mining, 2008–2019; Probability and Statistics, 2005–2009.

- Program Committee Member for several meetings, including: AAAI, CLeaR, ECAI, ECMLPKDD, IPMU, ISIPTA, IJCAI, NeurIPS, PGM, SMPS, UAI, WHY. Senior Program Committee Member for IJCAI 2021–2023, UAI 2021–2023, AAAI 2022–2023.

- Scientific Director of IDSIA since 2019.

- Co-founder and Chief Scientist of Artificialy since 2020.

- Top 2% scientist according to Stanford's science-wide author databases of standardised citation indicators (2019–2022).

Papers

Writing. I am writing a longer letter than usual because there is not enough time to write a short one. (Blaise Pascal)

- Antonucci, A., Piqué, G., Zaffalon, M. (2024). Zero-shot causal graph extrapolation from text via LLMs. In XAI4Sci-24 @ AAAI 2024.

- Zaffalon, M., Antonucci, A., Cabañas, R., Huber, D., Azzimonti, D. (2024). Efficient computation of counterfactual bounds. International Journal of Approximate Reasoning tbd, 109111.

- Benavoli, A., Facchini, A., Zaffalon, M. (2023). Closure operators, classifiers and desirability. In Quaeghebeur, E., Miranda, E., Montes, I., Vantaggi, B. (Eds), ISIPTA '23. PMLR 215, JMLR.org, 25–36.

- Casanova, A., Benavoli, A., Zaffalon, M. (2023). Nonlinear desirability as a linear classification problem. International Journal of Approximate Reasoning 152, 1–32.

- Huber, D., Chen, Y., Antonucci, A., Darwiche, A., Zaffalon, M. (2023). Tractable bounding of counterfactual queries by knowledge compilation. In TPM-23 @ UAI 2023.

- Miranda, E., Zaffalon, M. (2023). Nonlinear desirability theory. International Journal of Approximate Reasoning 154, 176–199.

- Schürch, M., Azzimonti, D., Benavoli, A., Zaffalon, M. (2023). Correlated product of experts for sparse Gaussian process regression. Machine Learning 112, 1411–1432.

- Zaffalon, M., Antonucci, A., Cabañas, R., Huber, D. (2023). Approximating counterfactual bounds while fusing observational, biased and randomised data sources. International Journal of Approximate Reasoning 162, 109023.

- Zaffalon, M., Antonucci, A., Szher, M. (2023). Solving the Allais paradox by counterfactual harm. In Quaeghebeur, E., Miranda, E., Montes, I., Vantaggi, B. (Eds), ISIPTA '23.

- Benavoli, A., Facchini, A., Zaffalon, M. (2022). Quantum indistinguishability through exchangeability. International Journal of Approximate Reasoning 151, 389–412.

- Casanova, A., Kohlas, J., Zaffalon, M. (2022). Information algebras in the theory of imprecise probabilities. International Journal of Approximate Reasoning 142, 383–416.

- Casanova, A., Kohlas, J., Zaffalon, M. (2022). Information algebras in the theory of imprecise probabilities, an extension. International Journal of Approximate Reasoning 150, 311–336.

- Zaffalon, M., Antonucci, A., Cabañas, R., Huber, D., Azzimonti, D. (2022). Bounding counterfactuals under selection bias. In Salmerón, A., Rumí, R. (Eds), PGM 2022. PMLR 186, JMLR.org, 289–300.

- Benavoli, A., Facchini, A., Zaffalon, M. (2021). Quantum indistinguishability through exchangeable desirable gambles. In de Bock, J., Cano, A., Miranda, E., Moral, S. (Eds), ISIPTA '21. PMLR 147, JMLR.org, 22–31.

- Benavoli, A., Facchini, A., Zaffalon, M. (2021). The weirdness theorem and the origin of quantum paradoxes. Foundations of Physics 51(5), 95.

- Casanova, A., Benavoli, A., Zaffalon, M. (2021). Nonlinear desirability as a linear classification problem. In de Bock, J., Cano, A., Miranda, E., Moral, S. (Eds), ISIPTA '21. PMLR 147, JMLR.org, 61–71.

- Casanova, A., Kohlas, J., Zaffalon, M. (2021). Algebras of sets and coherent sets of gambles. In Vejnarová, J., Wilson, N. (Eds), ECSQARU 2021. Lecture Notes in Artificial Intelligence 12897, Springer, 603–615.

- Casanova, A., Miranda, E., Zaffalon, M. (2021). Joint desirability foundations of social choice and opinion pooling. Annals of Mathematics and Artificial Intelligence 89(10–11), 965–1011.

- Corani, G., Benavoli, A., Zaffalon, M. (2021). Time series forecasting with Gaussian processes needs priors. In ECML PKDD 2021. Lecture Notes in Computer Science 4, Springer, 103–117.

- Kohlas, J., Casanova, A., Zaffalon, M. (2021). Information algebras of coherent sets of gambles in general possibility spaces. In de Bock, J., Cano, A., Miranda, E., Moral, S. (Eds), ISIPTA '21. PMLR 147, JMLR.org, 191–200.

- Zaffalon, M., Miranda, E. (2021). Desirability foundations of robust rational decision making. Synthese 198(27), S6529–S6570 (published online in 2018).

- Zaffalon, M., Antonucci, A., Cabañas, R. (2021). Causal expectation-maximisation. In WHY-21 @ NeurIPS 2021.

- Zaffalon, M., Miranda, E. (2021). The sure thing. In de Bock, J., Cano, A., Miranda, E., Moral, S. (Eds), ISIPTA '21. PMLR 147, JMLR.org, 342–351.

- Cabañas, R., Antonucci, A., Huber, D., Zaffalon, M. (2020). CREDICI: a Java library for causal inference by credal networks. In Jaeger, M., Nielsen, T. D. (Eds), PGM 2020. PMLR 138, JMLR.org, 597–600.

- Casanova, A., Miranda, E., Zaffalon, M. (2020). Social pooling of beliefs and values with desirability. In FLAIRS-33. AAAI, 569–574.

- Corani, G., Azzimonti, G., Augusto, J., Zaffalon, M. (2020). Probabilistic reconciliation of hierarchical forecast via Bayes' rule. In Hutter, F., Kersting, K., Lijffijt, J., Valera, I. (Eds), ECML PKDD 2020. Lecture Notes in Computer Science 12459, Springer, 211–226.

- Huber, D., Cabañas, R., Antonucci, A., Zaffalon, M. (2020). CREMA: a Java library for credal network inference. In Jaeger, M., Nielsen, T. D. (Eds), PGM 2020. PMLR 138, JMLR.org, 613–616.

- Kern, H., Corani, G., Huber, D., Vermes, N., Zaffalon, M., Varini, M., Wenzel, C., Fringer, A. (2020). Impact on place of death in cancer patients: a causal exploration in southern Switzerland. BMC Palliative Care 19, 160.

- Miranda, E., Zaffalon, M. (2020). Compatibility, desirability, and the running intersection property. Artificial Intelligence 283, 103274.

- Schürch, M., Azzimonti, D., Benavoli, A., Zaffalon, M. (2020). Recursive estimation for sparse Gaussian process regression. Automatica 120, 109127.

- Yoo, J., Kang, U, Scanagatta, M., Corani, G., Zaffalon, M. (2020). Sampling subgraphs with guaranteed treewidth for accurate and efficient graphical inference. In Caverlee, J., Hu, X., Lalmas, M., Wang, W. (Eds), WSDM '20. ACM, 708–716.

- Zaffalon, M., Antonucci, A., Cabañas, R. (2020). Structural causal models are (solvable by) credal networks. In Jaeger, M., Nielsen, T. D. (Eds), PGM 2020. PMLR 138, JMLR.org, 581–592.

- Azzimonti, L., Corani, G., Zaffalon, M. (2019). Hierarchical estimation of parameters in Bayesian networks. Computational Statistics and Data Analysis 137, 67–91.

- Benavoli, A., Facchini, A., Piga, D., Zaffalon, M. (2019). Sum-of-squares for bounded rationality. International Journal of Approximate Reasoning 105, 130–152.

- Benavoli, A., Facchini, A., Zaffalon, M. (2019). Bernstein's socks, polynomial-time provable coherence and entanglement. In de Bock, J., de Campos, C., de Cooman, G., Quaeghebeur, E., Wheeler, G. (Eds), ISIPTA '19. PMLR 103, JMLR.org, 23–31.

- Miranda, E., Zaffalon, M. (2019). Compatibility, coherence and the RIP. In Destercke, S., Denoeux, T., Gil, M. A., Grzegorzewski, P., Hryniewicz, O. (Eds), SMPS 2018, Uncertainty Modelling in Data Science, Advances in Intelligent and Soft Computing 832, Springer, 166–174.

- De Campos, C. P., Scanagatta, M., Corani, G., Zaffalon, M. (2018). Entropy-based pruning for learning Bayesian networks using BIC. Artificial Intelligence 260, 42–50.

- Scanagatta, M., Corani, G., de Campos, C. P., Zaffalon, M. (2018). Approximate structure learning for large Bayesian networks. Machine Learning 107(8–10), 1209–1227.

- Scanagatta, M., Corani, G., Zaffalon, M., Yoo, J., Kang, U (2018). Efficient learning of bounded-treewidth Bayesian networks from complete and incomplete data sets. International Journal of Approximate Reasoning 95, 152–166.

- Azzimonti, L., Corani, G., Zaffalon, M. (2017). Hierarchical multinomial-Dirichlet model for the estimation of conditional probability tables. In Raghavan, V., Aluru, S., Karypis, G., Miele, L., Wu, X. (Eds), ICDM 2017, IEEE, 739–744.

- Benavoli, A., Corani, G., Demšar, J., Zaffalon, M. (2017). Time for a change: a tutorial for comparing multiple classifiers through Bayesian analysis. Journal of Machine Learning Research 18(37), 1–36.

- Benavoli, A., Facchini, A., Piga, D., Zaffalon, M. (2017). SOS for bounded rationality. In Antonucci, A., Corani, G., Couso, I., Destercke, S. (Eds), ISIPTA '17. PMLR 62, JMLR.org, 25–36.

- Benavoli, A., Facchini, A., Zaffalon, M. (2017). A Gleason-type theorem for any dimension based on a gambling formulation of quantum mechanics. Foundations of Physics 47(7), 991–1002.

- Benavoli, A., Facchini, A., Zaffalon, M., Vicente-Pérez, J. (2017). A polarity theory for sets of desirable gambles. In Antonucci, A., Corani, G., Couso, I., Destercke, S. (Eds), ISIPTA '17. PMLR 62, JMLR.org, 37–48.

- Corani, G., Benavoli, A., Demšar, J., Mangili, F., Zaffalon, M. (2017). Statistical comparison of classifiers through Bayesian hierarchical modelling. Machine Learning 106(11), 1817–1837.

- Miranda, E., Zaffalon, M. (2017). Full conglomerability. Journal of Statistical Theory and Practice 11(4), 634–669.

- Miranda, E., Zaffalon, M. (2017). Full conglomerability, continuity and marginal extension. In Ferraro, M. B., Giordani, P., Vantaggi, B., Gagolewski, M., Gil, M. A., Grzegorzewski, P., Hryniewicz, O. (Eds), SMPS 2016, Soft Methods for Data Science, Advances in Intelligent and Soft Computing 456, Springer, 355–362.

- Scanagatta, M., Corani, G., Zaffalon, M. (2017). Improved local search in Bayesian networks structure learning. In Hyttinen, A., Malone, B., Suzuki, J. (Eds), AMBN 2017. PMLR 73, JMLR.org, 45–56.

- Zaffalon, M., Miranda, E. (2017). Axiomatising incomplete preferences through sets of desirable gambles. Journal of Artificial Intelligence Research 60, 1057–1126.

- Benavoli, A., Facchini, A., Zaffalon, M. (2016). Quantum mechanics: the Bayesian theory generalised to the space of Hermitian matrices. Physical Review A 94, 042106.

- Benavoli, A., Facchini, A., Zaffalon, M. (2016). Quantum rational preferences and desirability. In Proceedings of the 1st International Workshop on Imperfect Decision Makers: Admitting Real-World Rationality @ NIPS 2016, PMLR 58, JMLR.org, 87–96.

- De Campos, C. P., Corani, G., Scanagatta, M., Cuccu, M., Zaffalon, M. (2016). Learning extended tree augmented naive structures. International Journal of Approximate Reasoning 68, 153–163.

- Miranda, E., Zaffalon, M. (2016). Conformity and independence with coherent lower previsions. International Journal of Approximate Reasoning 78, 125–137.

- Rancoita, P. M. V., Zaffalon, M., Zucca, E., Bertoni, F., de Campos, C. P. (2016). Bayesian network data imputation with application to survival tree analysis. Computational Statistics and Data Analysis 93, 373–387.

- Scanagatta, M., Corani, G., de Campos, C. P., Zaffalon, M. (2016). Learning treewidth-bounded Bayesian networks with thousands of variables. In Lee, D. D., Sugiyama, M., Luxburg, U. V., Guyon, I., Garnett, R. (Eds), NIPS 2016, 1426–1470.

- Scanagatta, M., de Campos, C. P., Corani, G., Zaffalon, M. (2015). Learning Bayesian networks with thousands of variables. In Cortes, C., Lawrence, N. D., Lee, D. D., Sugiyama, M., Garnett, R. (Eds), NIPS 2015, Curran Associates, 1855–1863.

- Antonucci, A., de Campos, C. P., Huber, D., Zaffalon, M. (2015). Approximate credal network updating by linear programming with applications to decision making. International Journal of Approximate Reasoning 58, 25–38.

- Benavoli, A., Mangili, F., Corani, G., Zaffalon, M. (2015). A Bayesian nonparametric procedure for comparing algorithms. In Bach, F. R., Blei, D. M. (Eds), ICML 2015. PMLR 37, JMLR.org, 1264–1272.

- Benavoli, A., Mangili, F., Ruggeri, F., Zaffalon, M. (2015). Imprecise Dirichlet process with application to the hypothesis test on the probability that X≤Y. Journal of Statistical Theory and Practice 9(3), 658–684.

- Benavoli, A., Zaffalon, M. (2015). Prior near ignorance for inferences in the k-parameter exponential family. Statistics 49(5), 1104–1140.

- Corani, G., Benavoli, A., Mangili, F., Zaffalon, M. (2015). Bayesian hypothesis testing in machine learning. In Bifet, A., May, M., Zadrozny, B., Gavaldà, R., Pedreschi, D., Bonchi, F., Cardoso, J. S., Spiliopoulou, M. (Eds), ECML PKDD 2015. Lecture Notes in Computer Science 9286, Springer, 199–202.

- Mangili, F., Benavoli, A., de Campos, C. P., Zaffalon, M. (2015). Reliable survival analysis based on the Dirichlet process. Biometrical Journal 57, 1002–1019.

- Miranda, E., Zaffalon, M. (2015). Conformity and independence with coherent lower previsions. In Augustin, T., Doria, S., Miranda, E., Quaeghebeur, E. (Eds), ISIPTA '15. SIPTA, 197–206.

- Miranda, E., Zaffalon, M. (2015). Independent products in infinite spaces. Journal of Mathematical Analysis and Applications 425, 460–488.

- Miranda, E., Zaffalon, M. (2015). On the problem of computing the conglomerable natural extension. International Journal of Approximate Reasoning 56(A), 1–27.

- Antonucci, A., de Campos, C. P., Zaffalon, M. (2014). Probabilistic graphical models. In Augustin, T., Coolen, F., de Cooman, G., Troffaes, M. (Eds), Introduction to Imprecise Probabilities, Wiley, chapter 9.

- Benavoli, A., Mangili, F., Corani, G., Zaffalon, M., Ruggeri, F. (2014). A Bayesian Wilcoxon signed-rank test based on the Dirichlet process. In ICML 2014. PMLR 32, JMLR.org, 1026–1034.

- De Campos, C. P., Cuccu, M., Corani, G., Zaffalon, M. (2014). Extended tree augmented naive classifier. In van der Gaag, L., Feelders, A. (Eds), PGM 2014. Lecture Notes in Computer Science 8754, Springer, 176–189.

- Corani, G., Abellán, J., Masegosa, A., Moral, S., Zaffalon, M. (2014). Classification. In Augustin, T., Coolen, F., de Cooman, G., Troffaes, M. (Eds), Introduction to Imprecise Probabilities, Wiley, chapter 10.

- Scanagatta, M., de Campos, C. P., Zaffalon, M. (2014). Min-BDeu and max-BDeu scores for learning Bayesian networks. In van der Gaag, L., Feelders, A. (Eds), PGM 2014. Lecture Notes in Computer Science 8754, Springer, 426–441.

- Zaffalon, M., Corani, G. (2014). Comments on "Imprecise probability models for learning multinomial distributions from data. Applications to learning credal networks" by Andrés R. Masegosa and Serafín Moral. International Journal of Approximate Reasoning 55(7), 1579–1600.

- Antonucci, A., de Campos, C. P., Huber, D., Zaffalon, M. (2013). Approximating credal network inferences by linear programming. In van der Gaag, L. C. (Ed.), ECSQARU 2013. Lecture Notes in Computer Science 7958, Springer, 13–25. Best paper award.

- Antonucci, A., Huber, D., Zaffalon, M., Luginbühl, P., Chapman, I., Ladouceur, R. (2013). CREDO: a military decision-support system based on credal networks. In FUSION 2013.

- Benavoli, A., Zaffalon, M. (2013). Density-ratio robustness in dynamic state estimation. Mechanical Systems and Signal Processing 37, 54–75.

- De Campos, C. P., Rancoita, P. M. V., Kwee, I., Zucca, E., Zaffalon, M., Bertoni, F. (2013). Discovering subgroups of patients from DNA copy number data using NMF on compacted matrices. PLoS ONE 8(11), e79720.

- Mauá, D. D., de Campos, C. P., Zaffalon, M. (2013). On the complexity of solving polytree-shaped limited memory influence diagrams with binary variables. Artificial Intelligence 205, 30–38.

- Miranda, E., Zaffalon, M. (2013). Computing the conglomerable natural extension. In Cozman, F., Denoeux, T., Destercke, S., Seidenfeld, T. (Eds), ISIPTA '13. SIPTA, 255–264.

- Miranda, E., Zaffalon, M. (2013). Conglomerable coherence. International Journal of Approximate Reasoning 54(9), 1322–1350.

- Miranda, E., Zaffalon, M. (2013). Conglomerable coherent lower previsions. In Kruse, R., Berthold, M. R., Moewes, C., Gil, M. A., Grzegorzewski, P., Hryniewicz, O. (Eds), SMPS 2012. Synergies of Soft Computing and Statistics for Intelligent Data Analysis, Advances in Intelligent and Soft Computing 190, Springer Berlin Heidelberg, 419–427.

- Zaffalon, M., Miranda, E. (2013). Probability and time. Artificial Intelligence 198, 1–51.

- Benavoli, A., Zaffalon, M. (2012). A model of prior ignorance for inferences in the one-parameter exponential family. Journal of Statistical Planning and Inference 142(7), 1960–1979.

- Corani, G., Antonucci, A., Zaffalon, M. (2012). Bayesian networks with imprecise probabilities: theory and application to classification. In Holmes, D. E., Jain, L. C. (Eds), Data Mining: Foundations and Intelligent Paradigms (Volume 1: Clustering, Association and Classification). Springer-Verlag, Berlin, 49–93.

- Mauá, D. D., de Campos, C. P., Zaffalon, M. (2012). Solving limited memory influence diagrams. Journal of Artificial Intelligence Research 44, 97–140.

- Mauá, D. D., de Campos, C. P., Zaffalon, M. (2012). The complexity of approximately solving influence diagrams. In de Freitas, N., Murphy, K. P. (Eds), UAI 2012, 604–613.

- Mauá, D. D., de Campos, C. P., Zaffalon, M. (2012). Updating credal networks is approximable in polynomial time. International Journal of Approximate Reasoning 53(8), 1183–1199.

- Miranda, E., Zaffalon, M., de Cooman, G. (2012). Conglomerable natural extension. International Journal of Approximate Reasoning 53(8), 1200–1227.

- Zaffalon, M., Corani, G., Mauá, D. D. (2012). Evaluating credal classifiers by utility-discounted predictive accuracy. International Journal of Approximate Reasoning 53(8), 1282–1301.

- Benavoli, A., Zaffalon, M. (2011). A discussion on learning and prior ignorance for sets of priors in the one-parameter exponential family. In Coolen, F., de Cooman, G., Fetz, T., Oberguggenberger, M. (Eds), ISIPTA '11. SIPTA, 69–78.

- Benavoli, A., Zaffalon, M., Miranda, E. (2011). Robust filtering through coherent lower previsions. IEEE Transactions on Automatic Control 56(7), 1567–1581.

- De Cooman, G., Miranda, E., Zaffalon, M. (2011). Independent natural extension. Artificial Intelligence 175, 1911–1950.

- Mauá, D. D., de Campos, C. P., Zaffalon, M. (2011). A fully polynomial time approximation scheme for updating credal networks of bounded treewidth and number of variable states. In Coolen, F., de Cooman, G., Fetz, T., Oberguggenberger, M. (Eds), ISIPTA '11. SIPTA, 277–286.

- Miranda, E., Zaffalon, M., de Cooman, G. (2011). Conglomerable natural extension. In Coolen, F., de Cooman, G., Fetz, T., Oberguggenberger, M. (Eds), ISIPTA '11. SIPTA, 287–296.

- Zaffalon, M., Corani, G., Mauá, D. D. (2011). Utility-based accuracy measures to empirically evaluate credal classifiers. In Coolen, F., de Cooman, G., Fetz, T., Oberguggenberger, M. (Eds), ISIPTA '11. SIPTA, 401–410.

- Antonucci, A., Sun, Y., de Campos, C. P., Zaffalon, M. (2010). Generalized loopy 2U: a new algorithm for approximate inference in credal networks. International Journal of Approximate Reasoning 51(5), 474–484.

- De Cooman, G., Hermans, F., Antonucci, A., Zaffalon, M. (2010). Epistemic irrelevance in credal nets: the case of imprecise Markov trees. International Journal of Approximate Reasoning 51(9), 1029–1052.

- De Cooman, G., Miranda, E., Zaffalon, M. (2010). Factorisation properties of the strong product. In Borgelt, C., González Rodríguez, G., Trutschnig, W., Asunción Lubiano, M., Gil, M. A., Grzegorzewski, P., Hryniewicz, O. (Eds), SMPS 2010, Combining Soft Computing and Statistical Methods in Data Analysis, Advances in Intelligent and Soft Computing 77, 139–147.

- De Cooman, G., Miranda, E., Zaffalon, M. (2010). Independent natural extension. In Hüllermeier, E., Kruse, R., Hoffmann, F. (Eds), IPMU 2010, Computational Intelligence for Knowledge-Based Systems Design. Lecture Notes in Computer Science 6178, Springer, 737–746.

- Miranda, E., Zaffalon, M. (2010). Conditional models: coherence and inference through sequences of joint mass functions. Journal of Statistical Planning and Inference 140(7), 1805–1833.

- Miranda, E., Zaffalon, M. (2010). Notes on desirability and conditional lower previsions. Annals of Mathematics and Artificial Intelligence 60(3–4), 251–309.

- Pelessoni, R., Vicig, P., Zaffalon, M. (2010). Inference and risk measurement with the pari-mutuel model. International Journal of Approximate Reasoning 51(9), 1145–1158.

- Piatti, A., Antonucci, A., Zaffalon, M. (2010). Building knowledge-based systems by credal networks: a tutorial. In Baswell, A. R. (Ed), Advances in Mathematics Research 11, Nova Science Publishers, New York.

- Antonucci, A., Benavoli, A., Zaffalon, M., de Cooman, G., Hermans, F. (2009). Multiple model tracking by imprecise Markov trees. In FUSION 2009. IEEE, 1767–1774.

- Antonucci, A., Brühlmann, R., Piatti, A., Zaffalon, M. (2009). Credal networks for military identification problems. International Journal of Approximate Reasoning 50, 666–679.

- Benavoli, A., Zaffalon, M., Miranda, E. (2009). Reliable hidden Markov model filtering through coherent lower previsions. In FUSION 2009. IEEE, 1743–1750.

- Corani, G., Zaffalon, M. (2009). Lazy naive credal classifier. In U'09: Proceedings of the First ACM SIGKDD International Workshop on Knowledge Discovery from Uncertain Data. ACM, 30–37.

- De Cooman, G., Hermans, F., Antonucci, A., Zaffalon, M. (2009). Epistemic irrelevance in credal networks: the case of imprecise Markov trees. In Augustin, T., Coolen, F., Moral, S., Troffaes, M. C. M. (Eds), ISIPTA '09. SIPTA, 149–158.

- Miranda, E., Zaffalon, M. (2009). Coherence graphs. Artificial Intelligence 173, 104–144.

- Miranda, E., Zaffalon, M. (2009). Natural extension as a limit of regular extensions. In Augustin, T., Coolen, F., Moral, S., Troffaes, M. C. M. (Eds), ISIPTA '09. SIPTA, 327–336.

- Pelessoni, R., Vicig, P., Zaffalon, M. (2009). The pari-mutuel model. In Augustin, T., Coolen, F., Moral, S., Troffaes, M. C. M. (Eds), ISIPTA '09. SIPTA, 347–356.

- Piatti, A., Zaffalon, M., Trojani, F., Hutter, M. (2009). Limits of learning about a categorical latent variable under prior near-ignorance. International Journal of Approximate Reasoning 50, 597–611.

- Zaffalon, M., Miranda, E. (2009). Conservative inference rule for uncertain reasoning under incompleteness. Journal of Artificial Intelligence Research 34, 757–821.

- Antonucci, A., Zaffalon, M. (2008). Decision-theoretic specification of credal networks: a unified language for uncertain modeling with sets of Bayesian networks. International Journal of Approximate Reasoning 49(2), 345–361.

- Antonucci, A., Zaffalon, M., Sun, Y., de Campos, C. P. (2008). Generalized loopy 2U: a new algorithm for approximate inference in credal networks. In Jaeger, M., Nielsen, T. D. (Eds), PGM 2008. Hirtshals (Denmark), 17–24.

- Corani, G., Zaffalon, M. (2008). JNCC2, the implementation of naive credal classifier 2. Journal of Machine Learning Research 9, 2695–2698.

- Corani, G., Zaffalon, M. (2008). Credal model averaging: an extension of Bayesian model averaging to imprecise probabilities. In Daelemans, W., Goethals, B., Morik, K. (Eds), ECML PKDD 2008. Lecture Notes in Computer Science 5211, Springer, 257–271.

- Corani, G., Zaffalon, M. (2008). Learning reliable classifiers from small or incomplete data sets: the naive credal classifier 2. Journal of Machine Learning Research 9, 581–621.

- Corani, G., Zaffalon, M. (2008). Naive credal classifier 2: an extension of naive Bayes for delivering robust classifications. In Stahlbock, R., Crone, S. F., Lessmann, S. (Eds), DMIN'08. CSREA Press, 84–90.

- Salvetti, A., Antonucci, A., Zaffalon, M. (2008). Spatially distributed identification of debris flow source areas by credal networks. In Sànchez-Marrè, M., Béjar, J., Comas, J., Rizzoli, A. E., Guariso, G. (Eds), iEMSs 2008, iEMSs, 380–387.

- Antonucci, A., Brühlmann, R., Piatti, A., Zaffalon, M. (2007). Credal networks for military identification problems. In de Cooman, G., Vejnarová, J., Zaffalon, M. (Eds), ISIPTA '07. Action M Agency, Prague (Czech Republic), 1–10.

- Antonucci, A., Piatti, A., Zaffalon, M. (2007). Credal networks for operational risk measurement and management. In Apolloni, B., Howlett, R. J., Jain, L. C. (Eds), KES2007, Lecture Notes in Computer Science 4693, Springer, 604–611.

- Antonucci, A., Salvetti, A., Zaffalon, M. (2007). Credal networks for hazard assessment of debris flows. In Kropp, J., Scheffran, J. (Eds), Advanced Methods for Decision Making and Risk Management in Sustainability Science. Nova Science Publishers, New York.

- Antonucci, A., Zaffalon, M. (2007). Fast algorithms for robust classification with Bayesian nets. International Journal of Approximate Reasoning 44(3), 200–223.

- Miranda, E., Zaffalon, M. (2007). Coherence graphs. In de Cooman, G., Vejnarová, J., Zaffalon, M. (Eds), ISIPTA '07. Action M Agency, Prague (Czech Republic), 297–306.

- Piatti, A., Zaffalon, M., Trojani, F., Hutter, M. (2007). Learning about a categorical latent variable under prior near-ignorance. In de Cooman, G., Vejnarová, J., Zaffalon, M. (Eds), ISIPTA '07. Action M Agency, Prague (Czech Republic), 357–364.

- Vicig, P., Zaffalon, M., Cozman, F. G. (2007). Notes on "Notes on conditional previsions". International Journal of Approximate Reasoning 44(3), 358–365.

- Antonucci, A., Zaffalon, M. (2006). Equivalence between Bayesian and credal nets on an updating problem. In Lawry, J., Miranda, E., Bugarin, A., Li, S., Gil, M. A., Grzegorzewski, P., Hryniewicz, O. (Eds), SMPS 2006, Soft Methods for Integrated Uncertainty Modelling, Springer, 223–230.

- Antonucci, A., Zaffalon, M. (2006). Locally specified credal networks. In Studený, M., Vomlel, J. (Eds), PGM 2006, Action M Agency, Prague (Czech Republic), 25–34.

- Antonucci, A., Zaffalon, M., Ide, J. S., Cozman, F. G. (2006). Binarization algorithms for approximate updating in credal nets. In Penserini, L., Peppas, P., Perini, A. (Eds), STAIRS'06. IOS Press, Amsterdam (Netherlands), 120–131.

- Corani, G., Edgar, C., Marshall, I., Wesnes, K., Zaffalon, M. (2006). Classification of dementia types from cognitive profiles data. In ECML 2006. Lecture Notes in Computer Science 4213, Springer, Berlin, 470–477.

- Antonucci, A., Zaffalon, M. (2005). Fast algorithms for robust classification with Bayesian nets. In Cozman, F. G., Nau, R., Seidenfeld, T. (Eds), ISIPTA '05. SIPTA, 11–20.

- Hutter, M., Zaffalon, M. (2005). Distribution of mutual information from complete and incomplete data. Computational Statistics and Data Analysis 48(3), 633–657.

- Piatti, A., Zaffalon, M., Trojani, F. (2005). Limits of learning from imperfect observations under prior ignorance: the case of the imprecise Dirichlet model. In Cozman, F. G., Nau, R., Seidenfeld, T. (Eds), ISIPTA '05. SIPTA, 276–286.

- Zaffalon, M. (2005). Conservative rules for predictive inference with incomplete data. In Cozman, F. G., Nau, R., Seidenfeld, T. (Eds), ISIPTA '05. SIPTA, 406–415.

- Zaffalon, M. (2005). Credible classification for environmental problems. Environmental Modelling & Software 20(8), 1003–1012.

- Zaffalon, M., Hutter, M. (2005). Robust inference of trees. Annals of Mathematics and Artificial Intelligence 45(1–2), 215–239.

- Antonucci, A., Salvetti, A., Zaffalon, M. (2004). Assessing debris flow hazard by credal nets. In López-Díaz, M., Gil, M. A., Grzegorzewski, P., Hryniewicz, O., Lawry, J. (Eds), SMPS 2004, Soft Methodology and Random Information Systems, Springer, 125–132.

- Antonucci, A., Salvetti, A., Zaffalon, M. (2004). Hazard assessment of debris flows by credal networks. In Pahl-Wostl, C., Schmidt, S., Rizzoli, A. E., Jakeman, A. J. (Eds), iEMSs 2004, iEMSs, 98–103.

- De Cooman, G., Zaffalon, M. (2004). Updating beliefs with incomplete observations. Artificial Intelligence 159(1–2), 75–125.

- De Cooman, G., Zaffalon, M. (2003). Updating with incomplete observations. In Kjærulff, U., Meek, C. (Eds), UAI 2003. Morgan Kaufmann, 142–150.

- Hutter, M., Zaffalon, M. (2003). Bayesian treatment of incomplete discrete data applied to mutual information and feature selection. In Günter, A., Kruse, R., Neumann, B. (Eds), KI-2003. Lecture Notes in Computer Science 2821, Springer, Heidelberg, 396–406.

- Zaffalon, M., Fagiuoli, E. (2003). Tree-based credal networks for classification. Reliable Computing 9(6), 487–509.

- Zaffalon, M., Wesnes, K., Petrini, O. (2003). Reliable diagnoses of dementia by the naive credal classifier inferred from incomplete cognitive data. Artificial Intelligence in Medicine 29(1–2), 61–79.

- Zaffalon, M. (2002). Exact credal treatment of missing data. Journal of Statistical Planning and Inference 105(1), 105–122.

- Zaffalon, M. (2002). The naive credal classifier. Journal of Statistical Planning and Inference 105(1), 5–21.

- Zaffalon, M., Hutter, M. (2002). Robust feature selection by mutual information distributions. In Darwiche, A., Friedman, N. (Eds), UAI 2002. Morgan Kaufmann, 577–584.

- Gambardella, L. M., Mastrolilli, M., Rizzoli, A. E., Zaffalon, M. (2001). An optimization methodology for intermodal terminal management. Journal of Intelligent Manufacturing 12, 521–534.

- Zaffalon, M. (2001). Robust discovery of tree-dependency structures. In de Cooman, G., Fine, T., Seidenfeld, T. (Eds), ISIPTA '01. Shaker Publishing, The Netherlands, 394–403.

- Zaffalon, M. (2001). Statistical inference of the naive credal classifier. In de Cooman, G., Fine, T., Seidenfeld, T. (Eds), ISIPTA '01. Shaker Publishing, The Netherlands, 384–393.

- Zaffalon, M., Wesnes, K., Petrini, O. (2001). Credal classification for dementia screening. In Quaglini, S., Barahona, P., Andreassen, S. (Eds), AIME '01. Lecture Notes in Computer Science 2101, Springer, 67–76.

- Fagiuoli, E., Zaffalon, M. (2000). Tree-augmented naive credal classifiers. In IPMU 2000. Universidad Politécnica de Madrid, Spain, 1320–1327.

- Zaffalon, M. (1999). A credal approach to naive classification. In de Cooman, G., Cozman, F. G., Moral, S., Walley, P. (Eds), ISIPTA '99. The Imprecise Probabilities Project, Universiteit Gent, Belgium, 405–414.

- Fagiuoli, E., Zaffalon, M. (1998). 2U: an exact interval propagation algorithm for polytrees with binary variables. Artificial Intelligence 106(1), 77–107.

- Fagiuoli, E., Zaffalon, M. (1998). A note about redundancy in influence diagrams. International Journal of Approximate Reasoning 19(3–4), 231–246.

- Gambardella, L. M., Rizzoli, A. E., Zaffalon, M. (1998). Simulation and planning of an intermodal container terminal. Simulation 71(2), 107–116.

- Darby-Dowman, K., Little, J., Mitra, G., Zaffalon, M. (1997). Constraint logic programming and integer programming approaches and their collaboration in solving an assignment scheduling problem. Constraints 1(3), 245–264.

Edited books

- De Cooman, G., Vejnarová, J., Zaffalon, M. (Eds) (2007), ISIPTA '07, Action M Agency, Prague, Czech Republic.

- Bernard, J.-M., Seidenfeld, T., Zaffalon, M. (Eds) (2003), ISIPTA '03, Proceedings in Informatics 18, Carleton Scientific, Canada.

Edited journal issues

Zero-shot causal graph extrapolation from text via LLMs

Authors: Alessandro Antonucci, Gregorio Piqué and Marco Zaffalon.

Year: 2024.

Abstract: We evaluate the ability of large language models (LLMs) to infer causal relations from natural language. Compared to traditional natural language processing and deep learning techniques, LLMs show competitive performance in a benchmark of pairwise relations without needing (explicit) training samples. This motivates us to extend our approach to extrapolating causal graphs through iterated pairwise queries. We perform a preliminary analysis on a benchmark of biomedical abstracts with ground-truth causal graphs validated by experts. The results are promising and support the adoption of LLMs for such a crucial step in causal inference, especially in medical domains, where the amount of scientific text to analyse might be huge, and the causal statements are often implicit.

Published at XAI4Sci-24 @ AAAI 2024.

A version similar to the published paper can be downloaded.

Tractable bounding of counterfactual queries by knowledge compilation

Authors: David Huber, Yi Chen, Alessandro Antonucci, Adnan Darwiche and Marco Zaffalon.

Year: 2023.

Abstract: We discuss the problem of bounding partially identifiable queries, such as counterfactuals, in Pearlian structural causal models. A recently proposed iterated EM scheme yields an inner approximation of those bounds by sampling the initialisation parameters. Such a method requires multiple (Bayesian network) queries over models sharing the same structural equations and topology, but different exogenous probabilities. This setup makes a compilation of the underlying model to an arithmetic circuit advantageous, thus inducing a sizeable inferential speed-up. We show how a single symbolic knowledge compilation allows us to obtain the circuit structure with symbolic parameters to be replaced by their actual values when computing the different queries. We also discuss parallelisation techniques to further speed up the bound computation. Experiments against standard Bayesian network inference show clear computational advantages with up to an order of magnitude of speed-up.

Published at TPM-23 @ UAI 2023.

A version similar to the published paper can be downloaded.

Efficient computation of counterfactual bounds

Authors: Marco Zaffalon, Alessandro Antonucci, Rafael Cabañas, David Huber and Dario Azzimonti.

Year: 2024.

Abstract: We assume to be given structural equations over discrete variables inducing a directed acyclic graph, namely, a structural causal model, together with data about its internal nodes. The question we want to answer is how we can compute bounds for partially identifiable counterfactual queries from such an input. We start by giving a map from structural causal models to credal networks. This allows us to compute exact counterfactual bounds via algorithms for credal nets on a subclass of structural causal models. Exact computation is going to be inefficient in general given that, as we show, causal inference is NP-hard even on polytrees. We target then approximate bounds via a causal EM scheme. We evaluate their accuracy by providing credible intervals on the quality of the approximation; we show through a synthetic benchmark that the EM scheme delivers accurate results in a fair number of runs. In the course of the discussion, we also point out what seems to be a neglected limitation to the trending idea that counterfactual bounds can be computed without knowledge of the structural equations. We also present a real case study on palliative care to show how our algorithms can readily be used for practical purposes.

Published in International Journal of Approximate Reasoning tbd, 109111.

A version similar to the published paper can be downloaded.

Approximating counterfactual bounds while fusing observational, biased and randomised data sources

Authors: Marco Zaffalon, Alessandro Antonucci, Rafael Cabañas and David Huber.

Year: 2023.

Abstract: We address the problem of integrating data from multiple, possibly biased, observational and interventional studies, to eventually compute counterfactuals in structural causal models. We start from the case of a single observational dataset affected by a selection bias. We show that the likelihood of the available data has no local maxima. This enables us to use the causal expectation-maximisation scheme to approximate the bounds for partially identifiable counterfactual queries, which are the focus of this paper. We then show how the same approach can address the general case of multiple datasets, no matter whether interventional or observational, biased or unbiased, by remapping it into the former one via graphical transformations. Systematic numerical experiments and a case study on palliative care show the effectiveness of our approach, while hinting at the benefits of fusing heterogeneous data sources to get informative outcomes in case of partial identifiability.

Published in International Journal of Approximate Reasoning 162, 109023.

A version similar to the published paper can be downloaded.

Solving the Allais paradox by counterfactual harm

Authors: Marco Zaffalon, Alessandro Antonucci and Oleg Szher.

Year: 2023.

Abstract: We show that by framing Allais paradox as a causal problem, without loss of generality, we can solve the paradox using the recently proposed notion of counterfactual harm.

Published in Quaeghebeur, E., Miranda, E., Montes, I., Vantaggi, B. (Eds), ISIPTA '23.

A version similar to the published paper can be downloaded.

Closure operators, classifiers and desirability

Authors: Alessio Benavoli, Alessandro Facchini and Marco Zaffalon.

Year: 2023.

Abstract: At the core of Bayesian probability theory, or dually desirability theory, lies an assumption of linearity of the scale in which rewards are measured. We revisit two recent papers that extended desirability theory to the nonlinear case by letting the utility scale be represented either by a general closure operator or by a binary general (nonlinear) classifier. By using standard results in logic, we highlight the connection between these two approaches and show that this connection allows us to extend the separating hyperplane theorem (which is at the core of the duality between Bayesian decision theory and desirability theory) to the nonlinear case.

Published in Quaeghebeur, E., Miranda, E., Montes, I., Vantaggi, B. (Eds), ISIPTA '23. PMLR 215, JMLR.org, 25–36.

A version similar to the published paper can be downloaded.

Correlated product of experts for sparse Gaussian process regression

Authors: Manuel Schürch, Dario Azzimonti, Alessio Benavoli and Marco Zaffalon.

Year: 2023.

Abstract: Gaussian processes (GPs) are an important tool in machine learning and statistics. However, off-the-shelf GP inference procedures are limited to datasets with several thousand data points because of their cubic computational complexity. For this reason, many sparse GP techniques have been developed over the past years. In this paper, we focus on GP regression tasks and propose a new approach based on aggregating predictions from several local and correlated experts. Thereby, the degree of correlation between the experts can vary from independent up to fully correlated experts. The individual predictions of the experts are aggregated taking into account their correlation, resulting in consistent uncertainty estimates. Our method recovers independent Product of Experts, sparse GP and full GP in the limiting cases. The presented framework can deal with a general kernel function and multiple variables, and has a time and space complexity which is linear in the number of experts and data samples, which makes our approach highly scalable. We demonstrate superior performance, in a time vs. accuracy sense, of our proposed method against state-of-the-art GP approximations for synthetic as well as several real-world datasets with deterministic and stochastic optimization.

Published in Machine Learning 112, 1411–1432.

A version similar to the published paper can be downloaded.
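
As a rough illustration of the independent product-of-experts limit mentioned in the abstract, the sketch below combines Gaussian predictions from several experts at one test point by adding their precisions. This is the textbook independent rule, not the correlated aggregation proposed in the paper; the function name `poe_combine` and the numbers are hypothetical.

```python
def poe_combine(means, variances):
    """Combine independent Gaussian expert predictions at one test point.

    The product of Gaussian densities is (up to normalisation) Gaussian,
    with precision equal to the sum of the experts' precisions and a
    precision-weighted mean.
    """
    if len(means) != len(variances) or not means:
        raise ValueError("need one variance per mean, and at least one expert")
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    mean = sum(p * m for p, m in zip(precisions, means)) / total_precision
    return mean, 1.0 / total_precision


# Hypothetical predictions (mean, variance) from three local experts.
mean, var = poe_combine([1.0, 1.2, 0.8], [0.5, 0.25, 1.0])
print(mean, var)
```

Because this rule ignores any correlation between the experts, it can understate the combined uncertainty; that is the gap the correlated aggregation described in the abstract is meant to address.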

Nonlinear desirability theory

Authors: Enrique Miranda and Marco Zaffalon.

Year: 2023.

Abstract: Desirability can be understood as an extension of Anscombe and Aumann's Bayesian decision theory to sets of expected utilities. At the core of desirability lies an assumption of linearity of the scale in which rewards are measured. It is a traditional assumption used to derive the expected utility model, which clashes with a general representation of rational decision making, though. Allais has, in particular, pointed this out in 1953 with his famous paradox. We note that the utility scale plays the role of a closure operator when we regard desirability as a logical theory. This observation enables us to extend desirability to the nonlinear case by letting the utility scale be represented via a general closure operator. The new theory directly expresses rewards in actual nonlinear currency (money), much in Savage's spirit, while arguably weakening the founding assumptions to a minimum. We characterise the main properties of the new theory both from the perspective of sets of gambles and of their lower and upper prices (previsions). We show how Allais paradox finds a solution in the new theory, and discuss the role of sets of probabilities in the theory.

Published in International Journal of Approximate Reasoning 154, 176–199.

A version similar to the published paper can be downloaded.
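
To make the clash with linear utility concrete, here is a small sketch using the usual textbook presentation of Allais' four gambles (prizes of nothing, 1 million and 5 million). Many people prefer 1A to 1B and 2B to 2A, yet under expected utility the two preference gaps are identical for every utility assignment, so they cannot have opposite signs. The helper names are hypothetical and the code only illustrates the paradox, not the nonlinear theory of the paper.

```python
import random

# Prizes are indexed as 0 -> nothing, 1 -> 1 million, 2 -> 5 million.
# Each gamble is a probability vector over those three prizes.
GAMBLE_1A = (0.00, 1.00, 0.00)
GAMBLE_1B = (0.01, 0.89, 0.10)
GAMBLE_2A = (0.89, 0.11, 0.00)
GAMBLE_2B = (0.90, 0.00, 0.10)


def expected_utility(gamble, utility):
    return sum(p * u for p, u in zip(gamble, utility))


def preference_gaps(utility):
    """Return (EU(1A) - EU(1B), EU(2A) - EU(2B)) for a utility vector."""
    gap_1 = expected_utility(GAMBLE_1A, utility) - expected_utility(GAMBLE_1B, utility)
    gap_2 = expected_utility(GAMBLE_2A, utility) - expected_utility(GAMBLE_2B, utility)
    return gap_1, gap_2


rng = random.Random(0)
for _ in range(1000):
    # Any increasing utility over the three prizes.
    utility = sorted(rng.uniform(0.0, 100.0) for _ in range(3))
    gap_1, gap_2 = preference_gaps(utility)
    # Both gaps equal 0.11*u(1M) - 0.10*u(5M) - 0.01*u(0).
    assert abs(gap_1 - gap_2) < 1e-9
```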

Bounding counterfactuals under selection bias

Authors: Marco Zaffalon, Alessandro Antonucci, Rafael Cabañas, David Huber and Dario Azzimonti.

Year: 2022.

Abstract: Causal analysis may be affected by selection bias, which is defined as the systematic exclusion of data from a certain subpopulation. Previous work in this area focused on the derivation of identifiability conditions. We propose instead a first algorithm to address both identifiable and unidentifiable queries. We prove that, in spite of the missingness induced by the selection bias, the likelihood of the available data is unimodal. This enables us to use the causal expectation-maximisation scheme to obtain the values of causal queries in the identifiable case, and to compute bounds otherwise. Experiments demonstrate the approach to be practically viable. Theoretical convergence characterisations are provided.

Published in Salmerón, A., Rumí, R. (Eds), PGM 2022. PMLR 186, JMLR.org, 289–300.

A version similar to the published paper can be downloaded.

A revised and updated version of this conference paper is Approximating counterfactual bounds while fusing observational, biased and randomised data sources.

Quantum indistinguishability through exchangeability

Authors: Alessio Benavoli, Alessandro Facchini and Marco Zaffalon.

Year: 2022.

Abstract: Two particles are identical if all their intrinsic properties, such as spin and charge, are the same, meaning that no quantum experiment can distinguish them. In addition to the well known principles of quantum mechanics, understanding systems of identical particles requires a new postulate, the so called symmetrization postulate. In this work, we show that the postulate corresponds to exchangeability assessments for sets of observables (gambles) in a quantum experiment, when quantum mechanics is seen as a normative and algorithmic theory guiding an agent to assess her subjective beliefs represented as (coherent) sets of gambles. Finally, we show how sets of exchangeable observables (gambles) may be updated after a measurement and discuss the issue of defining entanglement for indistinguishable particle systems.

Published in International Journal of Approximate Reasoning 151, 389–412.

A version similar to the published paper can be downloaded.

Nonlinear desirability as a linear classification problem

Authors: Arianna Casanova, Alessio Benavoli and Marco Zaffalon.

Year: 2023.

Abstract: This paper presents an interpretation as classification problem for standard desirability and other instances of nonlinear desirability (convex coherence and positive additive coherence). In particular, we analyze different sets of rationality axioms and, for each one of them, we show that proving that a subject respects these axioms on the basis of a finite set of acceptable and a finite set of rejectable gambles can be reformulated as a binary classification problem where the family of classifiers used changes with the axioms considered. Moreover, by borrowing ideas from machine learning, we show the possibility of defining a feature mapping, which allows us to reformulate the above nonlinear classification problems as linear ones in higher-dimensional spaces. This allows us to interpret gambles directly as payoffs vectors of monetary lotteries, as well as to provide a practical tool to check the rationality of an agent.

Published in International Journal of Approximate Reasoning 152, 1–32.

A version similar to the published paper can be downloaded.

Information algebras in the theory of imprecise probabilities, an extension

Authors: Arianna Casanova, Jürg Kohlas and Marco Zaffalon.

Year: 2022.

Abstract: In recent works, we have shown how to construct an information algebra of coherent sets of gambles, considering firstly a particular model to represent questions, called the multivariate model, and then generalizing it. Here we further extend the construction made to the highest level of generality, setting up an associated information algebra of coherent lower previsions, analyzing the connection of both the information algebras constructed with an instance of set algebras and, finally, establishing and inspecting a version of the marginal problem in this framework.

Published in International Journal of Approximate Reasoning 150, 311–336.

A version similar to the published paper can be downloaded.

Information algebras in the theory of imprecise probabilities

Authors: Arianna Casanova, Jürg Kohlas and Marco Zaffalon.

Year: 2022.

Abstract: In this paper we create a bridge between desirability and information algebras: we show how coherent sets of gambles, as well as coherent lower previsions, induce such structures. This allows us to enforce the view of such imprecise-probability objects as algebraic and logical structures; moreover, it enforces the interpretation of probability as information, and gives tools to manipulate them as such.

Published in International Journal of Approximate Reasoning 142, 383–416.

A version similar to the published paper can be downloaded.

Causal expectation-maximisation

Authors: Marco Zaffalon, Alessandro Antonucci and Rafael Cabañas.

Year: 2021.

Abstract: Structural causal models are the basic modelling unit in Pearl's causal theory; in principle they allow us to solve counterfactuals, which are at the top rung of the ladder of causation. But they often contain latent variables that limit their application to special settings. This appears to be a consequence of the fact, proven in this paper, that causal inference is NP-hard even in models characterised by polytree-shaped graphs. To deal with such a hardness, we introduce the causal EM algorithm. Its primary aim is to reconstruct the uncertainty about the latent variables from data about categorical manifest variables. Counterfactual inference is then addressed via standard algorithms for Bayesian networks. The result is a general method to approximately compute counterfactuals, be they identifiable or not (in which case we deliver bounds). We show empirically, as well as by deriving credible intervals, that the approximation we provide becomes accurate in a fair number of EM runs. These results lead us finally to argue that there appears to be an unnoticed limitation to the trending idea that counterfactual bounds can often be computed without knowledge of the structural equations.

Published at WHY-21 @ NeurIPS 2021.

A version similar to the published paper can be downloaded.

A revised and updated version of this conference paper is Efficient computation of counterfactual bounds.

The weirdness theorem and the origin of quantum paradoxes

Authors: Alessio Benavoli, Alessandro Facchini and Marco Zaffalon.

Year: 2021.

Abstract: We argue that there is a simple, unique, reason for all quantum paradoxes, and that such a reason is not uniquely related to quantum theory. It is rather a mathematical question that arises at the intersection of logic, probability, and computation. We give our 'weirdness theorem' that characterises the conditions under which the weirdness will show up. It shows that whenever logic has bounds due to the algorithmic nature of its tasks, then weirdness arises in the special form of negative probabilities or non-classical evaluation functionals. Weirdness is not logical inconsistency, however. It is only the expression of the clash between an unbounded and a bounded view of computation in logic. We discuss the implication of these results for quantum mechanics, arguing in particular that its interpretation should ultimately be computational rather than exclusively physical. We develop in addition a probabilistic theory in the real numbers that exhibits the phenomenon of entanglement, thus concretely showing that the latter is not specific to quantum mechanics.

Published in Foundations of Physics 51(5), 95.

A version similar to the published paper can be downloaded.

Time series forecasting with Gaussian processes needs priors

Authors: Giorgio Corani, Alessio Benavoli and Marco Zaffalon.

Year: 2021.

Abstract: Automatic forecasting is the task of receiving a time series and returning a forecast for the next time steps without any human intervention. Gaussian Processes (GPs) are a powerful tool for modeling time series, but so far there are no competitive approaches for automatic forecasting based on GPs. We propose practical solutions to two problems: automatic selection of the optimal kernel and reliable estimation of the hyperparameters. We propose a fixed composition of kernels, which contains the components needed to model most time series: linear trend, periodic patterns, and other flexible kernel for modeling the non-linear trend. Not all components are necessary to model each time series; during training the unnecessary components are automatically made irrelevant via automatic relevance determination (ARD). We moreover assign priors to the hyperparameters, in order to keep the inference within a plausible range; we design such priors through an empirical Bayes approach. We present results on many time series of different types; our GP model is more accurate than state-of-the-art time series models. Thanks to the priors, a single restart is enough to estimate the hyperparameters; hence the model is also fast to train.

Published in ECML PKDD 2021. Lecture Notes in Computer Science 4, Springer, 103–117.

A version similar to the published paper can be downloaded.

Algebras of sets and coherent sets of gambles

Authors: Arianna Casanova, Jürg Kohlas and Marco Zaffalon.

Year: 2021.

Abstract: In a recent work we have shown how to construct an information algebra of coherent sets of gambles defined on general possibility spaces. Here we analyze the connection of such an algebra with the set algebra of sets of its atoms and the set algebra of subsets of the possibility space on which gambles are defined. Set algebras are particularly important information algebras since they are their prototypical structures. Furthermore, they are the algebraic counterparts of classical propositional logic. As a consequence, this paper also details how propositional logic is naturally embedded into the theory of imprecise probabilities.

Published in Vejnarová, J., Wilson, N. (Eds), ECSQARU 2021. Lecture Notes in Artificial Intelligence 12897, Springer, 603–615.

A version similar to the published paper can be downloaded.

A revised and updated version of this conference paper is Information algebras in the theory of imprecise probabilities, an extension.

Quantum indistinguishability through exchangeable desirable gambles

Authors: Alessio Benavoli, Alessandro Facchini and Marco Zaffalon.

Year: 2021.

Abstract: Two particles are identical if all their intrinsic properties, such as spin and charge, are the same, meaning that no quantum experiment can distinguish them. In addition to the well-known principles of quantum mechanics, understanding systems of identical particles requires a new postulate, the so-called symmetrization postulate. In this work, we show that the postulate corresponds to exchangeability assessments for sets of observables (gambles) in a quantum experiment, when quantum mechanics is seen as a normative and algorithmic theory guiding an agent to assess her subjective beliefs represented as (coherent) sets of gambles. Finally, we show how sets of exchangeable observables (gambles) may be updated after a measurement and discuss the issue of defining entanglement for indistinguishable particle systems.

Published in de Bock, J., Cano, A., Miranda, E., Moral, S. (Eds), ISIPTA '21. PMLR 147, JMLR.org, 22–31.

A version similar to the published paper can be downloaded.

A revised and updated version of this conference paper is Quantum indistinguishability through exchangeability.

Nonlinear desirability as a linear classification problem

Authors: Arianna Casanova, Alessio Benavoli and Marco Zaffalon.

Year: 2021.

Abstract: The present paper proposes a generalization of linearity axioms of coherence through a geometrical approach, which leads to an alternative interpretation of desirability as a classification problem. In particular, we analyze different sets of rationality axioms and, for each one of them, we show that proving that a subject, who provides finite accept and reject statements, respects these axioms, corresponds to solving a binary classification task using, each time, a different (usually nonlinear) family of classifiers. Moreover, by borrowing ideas from machine learning, we show the possibility to define a feature mapping allowing us to reformulate the above nonlinear classification problems as linear ones in a higher-dimensional space. This allows us to interpret gambles directly as payoffs vectors of monetary lotteries, as well as to reduce the task of proving the rationality of a subject to a linear classification task.

Published in de Bock, J., Cano, A., Miranda, E., Moral, S. (Eds), ISIPTA '21. PMLR 147, JMLR.org, 61–71.

A version similar to the published paper can be downloaded.

A revised and updated version of this conference paper is Nonlinear desirability as a linear classification problem.

Information algebras of coherent sets of gambles in general possibility spaces

Authors: Jürg Kohlas, Arianna Casanova and Marco Zaffalon.

Year: 2021.

Published in de Bock, J., Cano, A., Miranda, E., Moral, S. (Eds), ISIPTA '21. PMLR 147, JMLR.org, 191–200.

A version similar to the published paper can be downloaded.

A revised and updated version of this conference paper is Information algebras in the theory of imprecise probabilities, an extension.

The sure thing

Authors: Marco Zaffalon and Enrique Miranda.

Year: 2021.

Abstract: If we prefer action a to b both under an event and under its complement, then we should just prefer a to b. This is Savage's sure-thing principle. In spite of its intuitive- and simple-looking nature, for which it gets almost immediate acceptance, the sure thing is not a logical principle. So where does it get its support from? In fact, the sure thing may actually fail. This is related to a variety of deep and foundational concepts in causality, decision theory, and probability, as well as to Simpson's paradox and Blyth's game. In this paper we try to systematically clarify such a network of relations. Then we propose a general desirability theory for non-linear utility scales. We use that to show that the sure thing is primitive to many of the previous concepts: in non-causal settings, the sure thing follows from considerations of temporal coherence and coincides with conglomerability; it can be understood as a rationality axiom to enable well-behaved conditioning in logic. In causal settings, it can be derived using only coherence and a causal independence condition.

Published in de Bock, J., Cano, A., Miranda, E., Moral, S. (Eds), ISIPTA '21. PMLR 147, JMLR.org, 342–351.

A version similar to the published paper can be downloaded.
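
For readers unfamiliar with Simpson's paradox, mentioned in the abstract, the sketch below shows the reversal on a small table of illustrative counts: option A has the higher success rate within each subgroup, yet the lower rate once the subgroups are pooled. The counts and helper names are assumptions chosen for illustration; the code does not reproduce any analysis from the paper.

```python
# Illustrative counts: (successes, trials) per option and subgroup.
counts = {
    "A": {"small": (81, 87), "large": (192, 263)},
    "B": {"small": (234, 270), "large": (55, 80)},
}


def rate(successes, trials):
    return successes / trials


def pooled_rate(groups):
    """Success rate after merging all subgroups of one option."""
    successes = sum(s for s, _ in groups.values())
    trials = sum(n for _, n in groups.values())
    return successes / trials


# A is better than B within each subgroup...
for group in ("small", "large"):
    assert rate(*counts["A"][group]) > rate(*counts["B"][group])

# ...but worse than B when the subgroups are pooled.
assert pooled_rate(counts["A"]) < pooled_rate(counts["B"])
```

The reversal arises because the two options are spread very unevenly over the subgroups, so the pooled rates weight the subgroups differently.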

Joint desirability foundations of social choice and opinion pooling

Authors: Arianna Casanova, Enrique Miranda and Marco Zaffalon.

Year: 2021.

Abstract: We develop joint foundations for the fields of social choice and opinion pooling using coherent sets of desirable gambles, a general uncertainty model that allows to encompass both complete and incomplete preferences. This leads on the one hand to a new perspective of traditional results of social choice (in particular Arrow's theorem as well as sufficient conditions for the existence of an oligarchy and democracy) and on the other hand to using the same framework to analyse opinion pooling. In particular, we argue that weak Pareto (unanimity) should be given the status of a rationality requirement and use this to discuss the aggregation of experts' opinions based on probability and (state-independent) utility, showing some inherent limitation of this framework, with implications for statistics. The connection between our results and earlier work in the literature is also discussed.

Published in Annals of Mathematics and Artificial Intelligence 89(10–11), 965–1011.

A version similar to the published paper can be downloaded.

Impact on place of death in cancer patients: a causal exploration in southern Switzerland

Authors: Heidi Kern, Giorgio Corani, David Huber, Nicola Vermes, Marco Zaffalon, Marco Varini, Claudia Wenzel and André Fringer.

Year: 2020.

Abstract: Most terminally ill cancer patients prefer to die at home, but a majority die in institutional settings. Research questions about this discrepancy have not been fully answered. This study applies artificial intelligence and machine learning techniques to explore the complex network of factors and the cause-effect relationships affecting the place of death, with the ultimate aim of developing policies favouring home-based end-of-life care.

Published in BMC Palliative Care 19, 160.

The published paper can be downloaded.

CREDICI: a Java library for causal inference by credal networks

Authors: Rafael Cabañas, Alessandro Antonucci, David Huber and Marco Zaffalon.

Year: 2020.

Abstract: We present CREDICI, a Java open-source tool for causal inference based on credal networks. Credal networks are an extension of Bayesian networks where local probability mass functions are only constrained to belong to given, so-called credal, sets. CREDICI is based on the recent work of Zaffalon et al. (2020), where an equivalence between Pearl's structural causal models and credal networks has been derived. This allows to reduce a counterfactual query in a causal model to a standard query in a credal network, even in the case of unidentifiable causal effects. The necessary transformations and data structures are implemented in CREDICI, while inferences are eventually computed by CREMA (Huber et al., 2020), a twin library for general credal network inference. Here we discuss the main implementation challenges and possible outlooks.

Published in Jaeger, M., Nielsen, T. D. (Eds), PGM 2020. PMLR 138, JMLR.org, 597–600.

A version similar to the published paper can be downloaded.

CREMA: a Java library for credal network inference

Authors: David Huber, Rafael Cabañas, Alessandro Antonucci and Marco Zaffalon.

Year: 2020.

Abstract: We present CREMA (credal models algorithms), a Java library for inference in credal networks. These models are analogous to Bayesian networks, but their local parameters are only constrained to vary in, so-called credal, sets. Inference in credal networks is intended as the computation of the bounds of a query with respect to those local variations. For credal networks the task is harder than in Bayesian networks, being NP^PP-hard in general models. Yet, scalable approximate algorithms have been shown to provide good accuracies on large or dense models, while exact techniques can be designed to process small or sparse models. CREMA embeds these algorithms and also offers an API to build and query credal networks together with a specification format. This makes CREMA, whose features are discussed and described by a simple example, the most advanced tool for credal network modelling and inference developed so far.

Published in Jaeger, M., Nielsen, T. D. (Eds), PGM 2020. PMLR 138, JMLR.org, 613–616.

A version similar to the published paper can be downloaded.

Structural causal models are (solvable by) credal networks

Authors: Marco Zaffalon, Alessandro Antonucci and Rafael Cabañas.

Year: 2020.

Abstract: A structural causal model is made of endogenous (manifest) and exogenous (latent) variables. We show that endogenous observations induce linear constraints on the probabilities of the exogenous variables. This allows to exactly map a causal model into a credal network. Causal inferences, such as interventions and counterfactuals, can consequently be obtained by standard algorithms for the updating of credal nets. These natively return sharp values in the identifiable case, while intervals corresponding to the exact bounds are produced for unidentifiable queries. A characterization of the causal models that allows the map above to be compactly derived is given, along with a discussion about the scalability for general models. This contribution should be regarded as a systematic approach to represent structural causal models by credal networks and hence to systematically compute causal inferences. A number of demonstrative examples is presented to clarify our methodology. Extensive experiments show that approximate algorithms for credal networks can immediately be used to do causal inference in real-size problems.

Published in Jaeger, M., Nielsen, T. D. (Eds), PGM 2020. PMLR 138, JMLR.org, 581–592.

A version similar to the published paper can be downloaded.

Recursive estimation for sparse Gaussian process regression

Authors: Manuel Schürch, Dario Azzimonti, Alessio Benavoli and Marco Zaffalon.

Year: 2020.

Abstract: Gaussian Processes (GPs) are powerful kernelized methods for non-parametric regression used in many applications. However, their use is limited to a few thousand training samples due to their cubic time complexity. In order to scale GPs to larger datasets, several sparse approximations based on so-called inducing points have been proposed in the literature. In this work we investigate the connection between a general class of sparse inducing point GP regression methods and Bayesian recursive estimation, which enables Kalman-filter-like updating for online learning. The majority of previous work has focused on the batch setting, in particular for learning the model parameters and the position of the inducing points; here instead we focus on training with mini-batches. By exploiting the Kalman filter formulation, we propose a novel approach that estimates such parameters by recursively propagating the analytical gradients of the posterior over mini-batches of the data. Compared to state-of-the-art methods, our method keeps analytic updates for the mean and covariance of the posterior, thus drastically reducing the size of the optimization problem. We show that our method achieves faster convergence and superior performance compared to state-of-the-art sequential Gaussian Process regression on synthetic GP as well as real-world data with up to a million data samples.

Published in Automatica 120, 109127.

A version similar to the published paper can be downloaded.

Probabilistic reconciliation of hierarchical forecast via Bayes' rule

Authors: Giorgio Corani, Dario Azzimonti, João Augusto and Marco Zaffalon.

Year: 2020.

Abstract: We present a novel approach for reconciling hierarchical forecasts, based on Bayes' rule. We define a prior distribution for the bottom time series of the hierarchy, based on the bottom base forecasts. Then we update their distribution via Bayes' rule, based on the base forecasts for the upper time series. Under the Gaussian assumption, we derive the updating in closed-form. We derive two algorithms, which differ as for the assumed independencies. We discuss their relation with the MinT reconciliation algorithm and with the Kalman filter, and we compare them experimentally.

Published in Hutter, F., Kersting, K., Lijffijt, J., Valera, I. (Eds), ECML PKDD 2020. Lecture Notes in Computer Science 12459. Springer, 211–226.

A version similar to the published paper can be downloaded.

Compatibility, desirability, and the running intersection property

Authors: Enrique Miranda and Marco Zaffalon.

Year: 2020.

Abstract: Compatibility is the problem of checking whether some given probabilistic assessments have a common joint probabilistic model. When the assessments are unconditional, the problem is well established in the literature and finds a solution through the running intersection property (RIP). This is not the case for conditional assessments. In this paper, we study the compatibility problem in a very general setting: any possibility space, unrestricted domains, imprecise (and possibly degenerate) probabilities. We extend the unconditional case to our setting, thus generalising most of the previous results in the literature. The conditional case turns out to be fundamentally different from the unconditional one. For such a case, we prove that the problem can still be solved in general by RIP but in a more involved way: by constructing a junction tree and propagating information over it. Still, RIP does not allow us to optimally take advantage of sparsity: in fact, conditional compatibility can be simplified further by joining junction trees with coherence graphs.

Published in Artificial Intelligence 283, 103274.

A version similar to the published paper can be downloaded.

Social pooling of beliefs and values with desirability

Authors: Arianna Casanova, Enrique Miranda and Marco Zaffalon.

Year: 2020.

Abstract: The problem of aggregating beliefs and values of rational subjects is treated with the formalism of sets of desirable gambles. This leads on the one hand to a new perspective of traditional results of social choice (in particular Arrow's theorem as well as sufficient conditions for the existence of an oligarchy and democracy) and on the other hand to use the same framework to create connections with opinion pooling. In particular, we show that weak Pareto can be derived as a coherence requirement and discuss the aggregation of state independent beliefs.

Published in FLAIRS-33, AAAI, 569–574.

A version similar to the published paper can be downloaded.

A revised and updated version of this conference paper is Joint desirability foundations of social choice and opinion pooling.

Sampling subgraphs with guaranteed treewidth for accurate and efficient graphical inference

Authors: Jaemin Yoo, U Kang, Mauro Scanagatta, Giorgio Corani and Marco Zaffalon.

Year: 2020.

Abstract: How can we run graphical inference on large graphs efficiently and accurately? Many real-world networks are modeled as graphical models, and graphical inference is fundamental to understand the properties of those networks. In this work, we propose a novel approach for fast and accurate inference, which first samples a small sub-graph and then runs inference over the subgraph instead of the given graph. This is done by the bounded tree-width (BTW) sampling, our novel algorithm that generates a subgraph with guaranteed bounded tree-width while retaining as many edges as possible. We first analyze the properties of BTW theoretically. Then, we evaluate our approach on node classification and compare it with the baseline which is to run loopy belief propagation (LBP) on the original graph. Our approach can be coupled with various inference algorithms: it shows higher accuracy up to 13.7% with the junction tree algorithm, and allows faster inference up to 23.8 times with LBP. We further compare BTW with previous graph sampling algorithms and show that it gives the best accuracy.

Published in Caverlee, J., Hu, X., Lalmas, M., Wang, W. (Eds), WSDM '20. ACM, 708–716.

A version similar to the published paper can be downloaded.

Bernstein's socks, polynomial-time provable coherence and entanglement

Authors: Alessio Benavoli, Alessandro Facchini and Marco Zaffalon.

Year: 2019.

Abstract: We recently introduced a bounded rationality approach for the theory of desirable gambles. It is based on the unique requirement that being nonnegative for a gamble has to be defined so that it can be provable in polynomial time. In this paper we continue to investigate properties of this class of models. In particular we verify that the space of Bernstein polynomials in which nonnegativity is specified by the Krivine-Vasilescu certificate is yet another instance of this theory. As a consequence, we show how it is possible to construct in it a thought experiment uncovering entanglement with classical (hence non quantum) coins.

Published in de Bock, J., de Campos, C., de Cooman, G., Quaeghebeur, E., Wheeler, G. (Eds), ISIPTA '19. PMLR 103, JMLR.org, 23–31.

A version similar to the published paper can be downloaded.

Hierarchical estimation of parameters in Bayesian networks

Authors: Laura Azzimonti, Giorgio Corani and Marco Zaffalon.

Year: 2019.

Abstract: A novel approach for parameter estimation in Bayesian networks is presented. The main idea is to introduce a hyper-prior in the Multinomial-Dirichlet model, traditionally used for conditional distribution estimation in Bayesian networks. The resulting hierarchical model jointly estimates different conditional distributions belonging to the same conditional probability table, thus borrowing statistical strength from each other. An analytical study of the dependence structure a priori induced by the hierarchical model is performed and an ad hoc variational algorithm for fast and accurate inference is derived. The proposed hierarchical model yields a major performance improvement in classification with Bayesian networks compared to traditional models. The proposed variational algorithm reduces by two orders of magnitude the computational time, with the same accuracy in parameter estimation, compared to traditional MCMC methods. Moreover, motivated by a real case study, the hierarchical model is applied to the estimation of Bayesian networks parameters by borrowing strength from related domains.

Published in Computational Statistics and Data Analysis 137, 67–91.

A version similar to the published paper can be downloaded.

Sum-of-squares for bounded rationality

Authors: Alessio Benavoli, Alessandro Facchini, Dario Piga and Marco Zaffalon.

Year: 2019.

Abstract: In the gambling foundation of probability theory, rationality requires that a subject should always (never) find desirable all nonnegative (negative) gambles, because no matter the result of the experiment the subject never (always) decreases her money. Evaluating the nonnegativity of a gamble in infinite spaces is a difficult task. In fact, even if we restrict the gambles to be polynomials in ℝ^n, the problem of determining nonnegativity is NP-hard. The aim of this paper is to develop a computable theory of desirable gambles. Instead of requiring the subject to desire all nonnegative gambles, we only require her to desire gambles for which she can efficiently determine the nonnegativity (in particular sum-of-squares polynomials). We refer to this new criterion as bounded rationality.

Published in International Journal of Approximate Reasoning 105, 130–152.

A version similar to the published paper can be downloaded.

Desirability foundations of robust rational decision making

Authors: Marco Zaffalon and Enrique Miranda.

Year: 2021 (published online in 2018).

Abstract: Recent work has formally linked the traditional axiomatisation of incomplete preferences à la Anscombe-Aumann with the theory of desirability developed in the context of imprecise probability, by showing in particular that they are the very same theory. The equivalence has been established under the constraint that the set of possible prizes is finite. In this paper, we relax such a constraint, thus de facto creating one of the most general theories of rationality and decision making available today. We provide the theory with a sound interpretation and with basic notions, and results, for the separation of beliefs and values, and for the case of complete preferences. Moreover, we discuss the role of conglomerability for the presented theory, arguing that it should be a rationality requirement under very broad conditions.

Published in Synthese 198(27), S6529–S6570.

A version similar to the published paper can be downloaded.

Compatibility, coherence and the RIP

Authors: Enrique Miranda and Marco Zaffalon.

Year: 2019.

Abstract: We generalise the classical result on the compatibility of marginal, possibly non-disjoint, assessments in terms of the running intersection property to the imprecise case, where our beliefs are modelled in terms of sets of desirable gambles. We consider the case where we have unconditional and conditional assessments, and show that the problem can be simplified via a tree decomposition.

Published in Destercke, S., Denoeux, T., Gil, M. A., Grzegorzewski, P., Hryniewicz, O. (Eds), SMPS 2018, Uncertainty Modelling in Data Science, Advances in Intelligent and Soft Computing 832, Springer, 166–174.

A version similar to the published paper can be downloaded.

Entropy-based pruning for learning Bayesian networks using BIC

Authors: Cassio P. de Campos, Mauro Scanagatta, Giorgio Corani and Marco Zaffalon.

Year: 2018.

Abstract: For decomposable score-based structure learning of Bayesian networks, existing approaches first compute a collection of candidate parent sets for each variable and then optimize over this collection by choosing one parent set for each variable without creating directed cycles while maximizing the total score. We target the task of constructing the collection of candidate parent sets when the score of choice is the Bayesian Information Criterion (BIC). We provide new non-trivial results that can be used to prune the search space of candidate parent sets of each node. We analyze how these new results relate to previous ideas in the literature both theoretically and empirically. We show in experiments with UCI data sets that gains can be significant. Since the new pruning rules are easy to implement and have low computational costs, they can be promptly integrated into all state-of-the-art methods for structure learning of Bayesian networks.

Published in Artificial Intelligence 260, 42–50.

A version similar to the published paper can be downloaded.

Approximate structure learning for large Bayesian networks

Authors: Mauro Scanagatta, Giorgio Corani, Cassio P. de Campos and Marco Zaffalon.

Year: 2018.