|

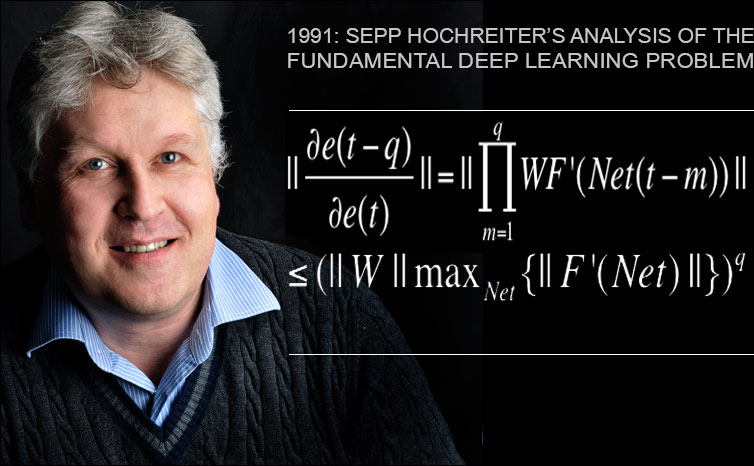

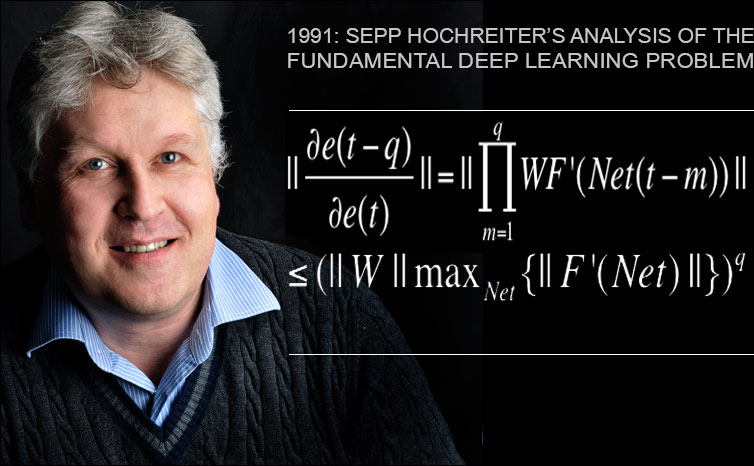

Sepp Hochreiter's Fundamental Deep Learning Problem (1991)

Jürgen Schmidhuber, 2013

Two decades later everybody is talking about Deep Learning! A first milestone of Deep Learning research was the 1991 diploma thesis of Sepp Hochreiter [1], my very first student, who is now a professor in Linz. His work formally showed that deep neural networks are hard to train, because they suffer from the now famous problem of vanishing or exploding gradients: in typical deep or recurrent networks, back-propagated error signals [3a,3] either shrink rapidly, or grow out of bounds [1]. In fact, they decay exponentially in the number of layers, or they explode. All our subsequent Deep Learning research of the 1990s and 2000s was motivated by this insight.

The thesis is in German, but don't worry, all basic results are documented in the universal language of mathematics [1]. (Google Translate does a reasonable job on it.)

Ten years later, an additional survey came out in English [2].

We have found four ways of partially overcoming the Fundamental Deep Learning Problem:

I. My first Deep Learner of 1991 overcame it through unsupervised pre-training for a hierarchy of (recurrent) neural networks [4]. This greatly facilitated subsequent supervised credit assignment through back-propagation.

II. LSTM-like networks (e.g., [5]) avoid the problem through special architecture unaffected by it.

III. Today, a million times faster GPU-based computers allow for propagating errors a few layers further down within reasonable time, even in traditional NN - that's basically what's winning many of the image competitions now, e.g., [6]. (Although this does not really overcome the problem in a fundamental way.)

IV.

The space of NN weight matrices can also be searched without relying on error gradients,

thus avoiding the Fundamental Deep Learning Problem altogether.

In fact, some simple problems requiring credit assignment

across many successive nonlinear operations

can better be solved by randomly guessing weights until a solution is found [7].

Other problems are better solved by using

universal search for weight matrix-computing programs written in

a universal programming language [8].

Some are better solved by using linear methods

to obtain optimal weights for connections to output events,

and evolving weights of connections to other events -

this is called Evolino [9].

References

[1] Sepp Hochreiter. Untersuchungen zu dynamischen neuronalen Netzen. Diploma thesis, TU Munich, 1991.

PDF. (Alternative PDFs can be found here - scroll all the way down).

[2] Sepp Hochreiter, Yoshua Bengio, Paolo Frasconi, Juergen Schmidhuber. Gradient flow in recurrent nets: the difficulty of learning long-term dependencies. In S. C. Kremer and J. F. Kolen, eds., A Field Guide to Dynamical Recurrent Neural Networks. IEEE press, 2001.

PDF.

[3] Paul J. Werbos. Applications of advances in nonlinear sensitivity analysis. In R. Drenick, F. Kozin, (eds): System Modeling and Optimization: Proc. IFIP (1981), Springer, 1982.

[3a] S. Linnainmaa. The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors. Master's Thesis (in Finnish), Univ. Helsinki, 1970. See chapters 6-7 and FORTRAN code on pages 58-60.

[4]

J. Schmidhuber. My first Deep Learner of 1991 + Deep Learning timeline 1962-2013

[5] 1990s: Deep Learner based on LSTM RNN - first international

pattern recognition competitions won in 2009

[6] 2011: Superhuman Visual Pattern Recognition through GPU-based deep feedforward NN

[7]

J. Schmidhuber and S. Hochreiter.

Guessing can outperform many long time lag algorithms.

Technical Note IDSIA-19-96, 1996.

[8]

J. Schmidhuber.

Discovering neural nets with low Kolmogorov complexity

and high generalization capability.

Neural Networks, 10(5):857-873, 1997.

PDF

.

[9]

J. Schmidhuber, D. Wierstra, M. Gagliolo, F. Gomez.

Training Recurrent Networks by Evolino.

Neural Computation, 19(3): 757-779, 2007.

PDF (preprint).

Compare Evolino overview (since IJCAI 2005).

.

|