
Formal Theory of Creativity & Fun & Intrinsic Motivation (1990-2010)

Since 1990 JS has built curious, creative agents that may be viewed as simple artificial scientists & artists with an intrinsic desire to explore the world by continually inventing new experiments. They never stop generating novel & surprising stuff, and consist of two learning modules: (A) an adaptive predictor or compressor or model of the growing data history as the agent is interacting with its environment, and (B) a general reinforcement learner (RL) selecting the actions that shape the history. The learning progress of (A) can be precisely measured and is the agent's fun: the intrinsic reward of (B). That is, (B) is motivated to learn to invent interesting things that (A) does not yet know but can easily learn. To maximize future expected reward, in the absence of external reward such as food, (B) learns more and more complex behaviors that yield initially surprising (but eventually boring) novel patterns that make (A) quickly improve. Many papers on this topic have appeared since 1990; key papers include those of 1991, 1995, 1997 (2002), 2006, 2007, and 2011 (see also the paper lists below). The agents embody a simple, but general, formal theory of fun & creativity explaining essential aspects of human or non-human intelligence, including selective attention, science, art, music, and humor.

More Formally. Let $O(t)$ denote the state of some subjective observer $O$ at time $t$. Let $H(t)$ denote its history of previous actions & sensations & rewards until time $t$. $O$ has some adaptive method for compressing $H(t)$ or parts of it. We identify the subjective momentary simplicity or compressibility or regularity or beauty $B(D,O(t))$ of any data $D$ (but not its interestingness or aesthetic / artistic value; see below) as the negative number of bits required to encode $D$, given the observer's current limited prior knowledge and limited compression method.
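To make $B$ and the learning progress of (A) concrete, here is a minimal sketch under loudly stated assumptions. The compressor (A) is a hypothetical order-1 adaptive frequency model with Laplace smoothing over a fixed alphabet (a toy stand-in, not the recurrent networks used in the actual work). $B(D,O)$ is taken as the negative number of bits this frozen model needs for $D$, and one "learning step" simply adds the transition counts of $D$ to the model. The change in $B$ across a learning step plays the role of the agent's fun. No reinforcement learner (B) is included.

```python
import math
import random
from collections import defaultdict


class CountModel:
    """Hypothetical stand-in for the adaptive compressor (A): an order-1
    frequency model with Laplace smoothing over a fixed alphabet."""

    def __init__(self, alphabet):
        self.alphabet = list(alphabet)
        # counts[previous_symbol][symbol]; None is the context of the first symbol
        self.counts = defaultdict(lambda: defaultdict(int))

    def prob(self, context, symbol):
        row = self.counts[context]
        return (row[symbol] + 1) / (sum(row.values()) + len(self.alphabet))

    def bits(self, data):
        """Code length of data, in bits, under the current (frozen) model."""
        context, total = None, 0.0
        for symbol in data:
            total -= math.log2(self.prob(context, symbol))
            context = symbol
        return total

    def learn(self, data):
        """One learning step: add the transition counts of data to the model."""
        context = None
        for symbol in data:
            self.counts[context][symbol] += 1
            context = symbol


def simplicity(model, data):
    """B(D, O): negative number of bits needed to encode D."""
    return -model.bits(data)


def compression_progress(model, data):
    """Change of B(D, O) caused by one learning step on D."""
    before = simplicity(model, data)
    model.learn(data)
    return simplicity(model, data) - before


regular = "ab" * 50
model = CountModel("ab")
print(model.bits(regular))                     # 100.0: one bit per symbol before learning
first = compression_progress(model, regular)   # large: a new regularity was found
second = compression_progress(model, regular)  # small: the pattern is getting boring

rng = random.Random(0)
noise = "".join(rng.choice("ab") for _ in range(100))
noise_progress = compression_progress(CountModel("ab"), noise)  # small: little to learn
print(first, second, noise_progress)
```

On the regular string the first learning step yields roughly 97 bits of progress and the second step far less, mirroring "initially surprising (but eventually boring)". On random data the toy model finds almost nothing learnable, so the progress stays small even though the data is new.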
We define the time-dependent subjective interestingness or novelty or surprise or aesthetic reward or aesthetic value or internal joy or fun $I(D,O(t))$ of data $D$ for observer $O$ at discrete time step $t > 0$ by $I(D,O(t)) = B(D,O(t)) - B(D,O(t-1))$, the change or first derivative of subjective simplicity or beauty: as the learning agent improves its compression algorithm, formerly apparently random data parts become subjectively more regular and beautiful, requiring fewer and fewer bits for their encoding. As long as this process is not over, the data remains interesting, but eventually it becomes boring even if it is beautiful. At time $t$, let $r_i(t) = I(H(t),O(t))$ denote the momentary fun or intrinsic reward for compression progress through discovery of a novel pattern somewhere in $H(t)$, the history of actions and sensations until $t$. Let $r_e(t)$ denote the current external reward if there is any, and $r(t) = g(r_i(t), r_e(t))$ the total current reward, where $g$ is a function weighing external vs intrinsic rewards, e.g., $g(a,b) = a + b$. The agent's goal at time $t_0$ is to maximize $E\left[\sum_{t=t_0}^{T} r(t)\right]$, where $E$ is the expectation operator and $T$ is the time of death. This can be done with one of our reinforcement learning algorithms.

Implementations. The following variants were implemented:
[1991a]: Non-traditional RL (without restrictive Markovian assumptions), based on adaptive recurrent neural networks as predictive world models, maximizes intrinsic reward as measured by prediction error.

[1991b]: Traditional RL maximizes intrinsic reward as measured by improvements in prediction error.

[1995]: Traditional RL maximizes intrinsic reward as measured by relative entropies between the agent's priors and posteriors.

[1997-2002]: Learning of probabilistic, hierarchical programs and skills through zero-sum intrinsic reward games of two players betting against each other, each trying to out-predict or surprise the other by inventing algorithmic experiments where both modules disagree on the predicted experimental outcome, taking into account the computational costs of learning, and learning when to learn and what to learn.

The papers of 1991-2002 also showed experimentally how intrinsic rewards can substantially accelerate goal-directed learning and external reward intake. JS also described mathematically optimal, intrinsically motivated systems driven by prediction progress or compression progress [2006-].

Developmental Robotics. Our continually learning artificial agents go through developmental stages: something interesting catches their attention for a while, but they get bored once they can't further improve their predictions; then they try to create new tasks for themselves to learn novel, more complex skills on top of what they already know; then this gets boring as well, and so on, in open-ended fashion.

Selected Videos and Invited Talks on Artificial Creativity etc

13 June 2012: JS' work featured in Through the Wormhole with Morgan Freeman on the Science Channel. Full video at YouTube; see 31:20 ff and 2:30 ff.

20 Jan 2012: TEDx talk (uploaded 10 March) at TEDx Lausanne: When creative machines overtake man (12:47).

15 Jan 2011: Winter Intelligence Conference, Oxford (on universal AI and theory of fun). Video of Sept 2011 at Vimeo or YouTube.

22 Sep 2010: Banquet talk in the historic Palau de la Música Catalana for Joint Conferences ECML / PKDD 2010, Barcelona: Formal Theory of Fun & Creativity. On Dec 14, videolectures.net posted a video and all slides of this talk.

12 Nov 2009: Keynote in the historic Cinema Corso (Lugano) for Multiple Ways to Design Research 09: Art & Science

3 Oct 2009: Invited talk for Singularity Summit in the historic Kaufmann Concert Hall, New York City. 10 min video.

12 Jul 2009: Dagstuhl Castle Seminar on Computational Creativity

3 Sep 2008: Keynote for KES 2008

2 Oct 2007: Joint invited lecture for Algorithmic Learning Theory (ALT 2007) and Discovery Science (DS 2007), Sendai, Japan

23 Aug 2007: Keynote for A*STAR Meeting on Expectation & Surprise, Singapore

12 July 2007: Keynote for Art Meets Science 2007: "Randomness vs simplicity & beauty in physics and the fine arts"


Picture: JS giving a talk on creativity theory & art & science & humor at the Singularity Summit 2009 in New York City. Videos: 10 min (excerpts at YouTube), 40 min (original at Vimeo), 20 min. JS' theory was also the subject of a TV documentary (BR "Faszination Wissen", 29 May 2008; several repeats on other channels). Compare the H+ interview and the Slashdot article.


How the Theory Explains Art. Artists (and observers of art) get rewarded for making (and observing) novel patterns: data that is neither arbitrary (like incompressible random white noise) nor regular in an already known way, but regular in a way that is new with respect to the observer's current knowledge, yet learnable (that is, after learning, fewer computational resources are needed to encode the data). While the formal theory of creativity explains the desire to create or observe all kinds of art, Low-Complexity Art (1997) applies and illustrates it in a particularly clear way. Example: many observers report that they derive pleasure from discovering simple but novel patterns while actively scanning the self-similar Femme Fractale. The observer's learning process causes a reduction of the subjective complexity of the data, yielding a temporarily high derivative of subjective beauty: a temporarily steep learning curve. Similarly, the computer-aided artist received reward for discovering a satisfactory way of using fractal circles to create this low-complexity artwork, although it took him a long time and thousands of frustrating trials. Here is the explanation of the artwork's low algorithmic complexity: the frame is a circle; its leftmost point is the center of another circle of the same size. The points where two circles of equal size touch or intersect are the centers of two more circles, of equal and half size, respectively. Each line of the drawing is a segment of some circle, and its endpoints are where circles touch or intersect. There are few big circles and many small ones. This can be used to encode the image very efficiently through a very short program. That is, the Femme Fractale has very low algorithmic information or Kolmogorov complexity. (The expression Femme Fractale was coined in 1997: J. Schmidhuber. Femmes Fractales. Report IDSIA-99-97, IDSIA, Switzerland, 1997. In 2012, the artwork first appeared on TV in Through the Wormhole with Morgan Freeman on the Science Channel.)
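The circle rule above can be turned into a very short generating program. The sketch below is one possible reading, not the artist's actual program. It assumes a unit radius and takes "equal and half size, respectively" to mean that each touch or intersection point of two equal circles becomes the center of one circle of the same radius and one of half that radius. It also stops after a fixed number of rounds and below an arbitrary minimum radius; the names `grow` and `equal_circle_points` are hypothetical. It only produces the pool of candidate circles. Selecting the arc segments that make up the drawing is not modelled.

```python
import math
from itertools import combinations


def equal_circle_points(c1, c2, r, eps=1e-9):
    """Touch or intersection points of two circles of equal radius r."""
    (x1, y1), (x2, y2) = c1, c2
    d = math.hypot(x2 - x1, y2 - y1)
    if d < eps or d > 2 * r + eps:
        return []  # identical or disjoint circles
    mx, my = (x1 + x2) / 2, (y1 + y2) / 2
    h = math.sqrt(max(r * r - (d / 2) ** 2, 0.0))
    if h < eps:
        return [(mx, my)]  # the circles touch in a single point
    ux, uy = -(y2 - y1) / d, (x2 - x1) / d  # unit vector perpendicular to c1 -> c2
    return [(mx + h * ux, my + h * uy), (mx - h * ux, my - h * uy)]


def key(x, y, r):
    """Round coordinates so that numerically equal circles are deduplicated."""
    return (round(x, 6), round(y, 6), round(r, 6))


def grow(rounds=2, min_radius=0.25):
    # Frame circle of radius 1 at the origin; its leftmost point (-1, 0)
    # is the center of another circle of the same size.
    circles = {key(0.0, 0.0, 1.0), key(-1.0, 0.0, 1.0)}
    for _ in range(rounds):
        new = set()
        for a, b in combinations(sorted(circles), 2):
            if a[2] != b[2]:
                continue  # the rule only applies to circles of equal size
            r = a[2]
            for x, y in equal_circle_points(a[:2], b[:2], r):
                new.add(key(x, y, r))          # one more circle of equal size
                if r / 2 >= min_radius:
                    new.add(key(x, y, r / 2))  # and one of half size
        circles |= new
    return circles


circles = grow(rounds=3)
print(len(circles), sorted({r for _, _, r in circles}, reverse=True))
```

The pool grows quickly with each round, yet the whole construction fits in a few dozen lines. That is the sense in which the picture has a short description.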

Key Papers Since 1990

J. Schmidhuber. A possibility for implementing curiosity and boredom in model-building neural controllers. In J. A. Meyer and S. W. Wilson, editors, Proc. of the International Conference on Simulation of Adaptive Behavior: From Animals to Animats, pages 222-227. MIT Press/Bradford Books, 1991. (Based on TR FKI-126-90, TUM, 1990.)

J. Schmidhuber. Curious model-building control systems. In Proc. International Joint Conference on Neural Networks, Singapore, volume 2, pages 1458-1463. IEEE, 1991.

J. Storck, S. Hochreiter, and J. Schmidhuber. Reinforcement-driven information acquisition in non-deterministic environments. In Proc. ICANN'95, vol. 2, pages 159-164. EC2 & CIE, Paris, 1995.

J. Schmidhuber. Low-Complexity Art. Leonardo, Journal of the International Society for the Arts, Sciences, and Technology, 30(2):97-103, MIT Press, 1997.

J. Schmidhuber. Artificial Curiosity Based on Discovering Novel Algorithmic Predictability Through Coevolution. In P. Angeline, Z. Michalewicz, M. Schoenauer, X. Yao, Z. Zalzala, eds., Congress on Evolutionary Computation, p. 1612-1618, IEEE Press, Piscataway, NJ, 1999. (Based on: What's interesting? TR IDSIA-35-97, 1997.)

J. Schmidhuber. Exploring the Predictable. In A. Ghosh, S. Tsutsui, eds., Advances in Evolutionary Computing, p. 579-612, Springer, 2002.

J. Schmidhuber. Developmental Robotics, Optimal Artificial Curiosity, Creativity, Music, and the Fine Arts. Connection Science, 18(2): 173-187, 2006.

J. Schmidhuber. Simple Algorithmic Principles of Discovery, Subjective Beauty, Selective Attention, Curiosity & Creativity. In V. Corruble, M. Takeda, E. Suzuki, eds., Proc. 10th Intl. Conf. on Discovery Science (DS 2007) p. 26-38, LNAI 4755, Springer, 2007.

J. Schmidhuber. POWERPLAY: Training an Increasingly General Problem Solver by Continually Searching for the Simplest Still Unsolvable Problem. Frontiers in Cognitive Science, 2013. ArXiv preprint (2011): arXiv:1112.5309 [cs.AI].

Compare: R. K. Srivastava, B. R. Steunebrink, J. Schmidhuber. First Experiments with PowerPlay. Neural Networks, 2013. ArXiv preprint (2012): arXiv:1210.8385 [cs.AI].


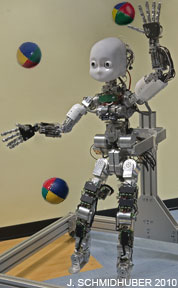

How the Theory Explains Fun Through Learning Motor Skills. In many ways the laughs provoked by witty jokes are similar to those provoked by the acquisition of new skills by both babies and adults. Past the age of 25, JS learnt to juggle three balls. It was not a sudden process but an incremental and rewarding one: in the beginning he managed to juggle them for maybe one second before they fell down, then two seconds, four seconds, etc., until he was able to do it right. Watching himself in the mirror (as recommended by juggling teachers), he noticed an idiotic grin across his face whenever he made progress. Later his little daughter grinned just like that when she was able to stand on her own feet for the first time.

All of this fits the theory perfectly: such grins are triggered by intrinsic reward for generating a data stream with novel, previously unknown patterns, such as the sensory input sequence corresponding to observing oneself juggling. This may be quite different from the more familiar experience of observing somebody else juggling, and is therefore truly novel and intrinsically rewarding, until the adaptive predictor / compressor (e.g., a recurrent neural network) gets used to it.
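The "until the predictor gets used to it" effect can be sketched numerically. In this sketch a two-weight linear predictor stands in for the recurrent network; it is a hypothetical substitute trained online with the LMS (stochastic gradient) rule. It forecasts an assumed periodic "juggling" signal, a sine wave with period 8, from the signal's two previous values. The intrinsic reward at each step is taken to be the drop in squared prediction error caused by that step's update, which is one simple reading of "prediction progress", not the specific measure of any of the papers.

```python
import math


def juggling_signal(t, period=8):
    """Hypothetical stand-in for a periodic sensory stream (e.g. ball height)."""
    return math.sin(2 * math.pi * t / period)


def intrinsic_rewards(steps=400, lr=0.5):
    w = [0.0, 0.0]  # linear predictor of x_t from (x_{t-1}, x_{t-2})
    rewards = []
    for t in range(2, steps):
        u = (juggling_signal(t - 1), juggling_signal(t - 2))
        x = juggling_signal(t)
        err_before = x - (w[0] * u[0] + w[1] * u[1])
        # one LMS step on the squared prediction error
        w[0] += lr * err_before * u[0]
        w[1] += lr * err_before * u[1]
        err_after = x - (w[0] * u[0] + w[1] * u[1])
        # intrinsic reward: reduction of squared error due to this learning step
        rewards.append(err_before ** 2 - err_after ** 2)
    return rewards


rewards = intrinsic_rewards()
print(sum(rewards[:20]) / 20, sum(rewards[-50:]) / 50)  # early fun vs. late boredom
```

A sine wave satisfies an exact linear recurrence in its two previous values, so the predictor can learn it completely. The reward is substantial during the first few periods and then decays to essentially zero: the stream has become boring.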

Picture: iCub baby robot as used in JS' EU project IM-CLEVER on developmental robotics and on implementing the theory of fun & creativity on robots. (So far, however, the iCub has been unable to juggle the three balls for more than 60 seconds; much remains to be done.)


How the Theory Generalizes Active Learning (e.g., Fedorov, 1972). Optimizing a function may require expensive data evaluations. Original active learning is limited to supervised classification tasks, asking which data points to evaluate next to maximize information gain, typically (but not necessarily) using 1-step look-ahead, and assuming that all data point evaluations are equally costly. The objective (to reduce classification error) is given externally; there is no explicit intrinsic reward in the sense discussed here. The more general framework of creativity theory also formally takes into account: (1) reinforcement learning agents embedded in an environment where there may be arbitrary delays between experimental actions and corresponding information gains, e.g., papers of 1991 & 1995; (2) the highly environment-dependent costs of obtaining or creating not just individual data points but data sequences of a priori unknown size; (3) arbitrary algorithmic or statistical dependencies in sequences of actions & sensory inputs, e.g., papers of 2002 & 2006; (4) the computational cost of learning new skills, e.g., the 2002 paper. Unlike previous approaches, these systems measure and maximize algorithmic novelty (learnable but previously unknown compressibility or predictability) of self-made, general, spatio-temporal patterns in the history of data and actions, e.g., papers 2006-2010.
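For contrast, the classical active-learning step that this paragraph generalizes can be sketched as follows. Each candidate "data point" is a hypothetical coin with unknown bias. The learner holds a posterior over an assumed 11-value grid of biases and uses 1-step look-ahead to evaluate the coin whose next flip has the largest expected information gain: the mutual information between bias and outcome, which equals the expected relative entropy between posterior and prior. All evaluations cost the same, there are no delays, and the objective is fixed from outside, which are exactly the restrictions listed above that the broader framework drops.

```python
import math

# Hypothetical discretized hypothesis space for each coin's bias theta.
GRID = [i / 10 for i in range(11)]


def h2(p):
    """Entropy (bits) of a Bernoulli(p) outcome."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def posterior(heads, tails):
    """Posterior over GRID after observing the counts (uniform prior)."""
    weights = [th ** heads * (1 - th) ** tails for th in GRID]
    z = sum(weights)
    return [w / z for w in weights]


def expected_info_gain(heads, tails):
    """Mutual information (bits) between theta and the next outcome."""
    post = posterior(heads, tails)
    p_heads = sum(w * th for w, th in zip(post, GRID))
    return h2(p_heads) - sum(w * h2(th) for w, th in zip(post, GRID))


def next_query(candidates):
    """1-step look-ahead: index of the candidate with the largest expected gain."""
    return max(range(len(candidates)),
               key=lambda i: expected_info_gain(*candidates[i]))


# Coin 0 has already been flipped 60 times, coin 1 never.
print(next_query([(30, 30), (0, 0)]))
```

The unexplored coin wins because little remains to be learned about the well-sampled one. Note that this quantity is purely statistical and myopic; it does not account for sequence costs, delays, or algorithmic regularities.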

No Objective Ideal Ratio Between Expected and Unexpected. Some of the previous attempts at explaining aesthetic experience in the context of math and information theory (Birkhoff 1933, Moles 1968, Bense 1969, Frank 1964, Nake 1974, Franke 1979) focused on the idea of an "ideal" ratio between expected and unexpected information conveyed by some aesthetic object (its order vs its complexity). Note that the alternative approach of JS does not have to postulate an objective ideal ratio of this kind. Instead, his dynamic measure of interestingness reflects the change in the number of bits required to encode an object, and explicitly takes into account the subjective observer's prior knowledge as well as its limited compression improvement algorithm. Hence the value of an aesthetic experience is not defined by the observed object per se, but by the algorithmic compression progress of the subjective, learning observer.

Summary. To build a creative system we need just a few crucial ingredients: (1) A predictor or compressor (e.g., an RNN) of the continually growing history of actions and sensory inputs, reflecting what's currently known about how the world works, (2) A learning algorithm that continually improves the predictor or compressor (detecting novel spatio-temporal patterns that subsequently become known patterns), (3) Intrinsic rewards measuring the predictor's or compressor's improvements (= first derivatives of compressibility) due to the learning algorithm, (4) A separate reward optimizer or reinforcement learner (could be an evolutionary algorithm), which translates those rewards into action sequences or behaviors expected to optimize future reward - the creative agent is intrinsically motivated to make additional novel patterns predictable or compressible in hitherto unknown ways, thus maximizing learning progress of the predictor / compressor.

Alternative Summary. Apart from external reward, how much fun can a subjective observer extract from some sequence of actions and observations? His intrinsic fun is the difference between how many resources (bits & time) he needs to encode the data before and after learning. A separate reinforcement learner maximizes expected fun by finding or creating data that is better compressible in some yet unknown but learnable way, such as jokes, songs, paintings, or scientific observations obeying novel, unpublished laws.

Copyright notice (2010): Text and graphics and Fibonacci web design by Jürgen Schmidhuber, Member of the European Academy of Sciences and Arts, 2010. JS will be delighted if you use parts of the data and graphics in this web page for educational and non-commercial purposes, including articles for Wikipedia and similar sites, provided you mention the source and provide a link.

Overview Papers Etc Since 2009

J. Schmidhuber. Driven by Compression Progress: A Simple Principle Explains Essential Aspects of Subjective Beauty, Novelty, Surprise, Interestingness, Attention, Curiosity, Creativity, Art, Science, Music, Jokes. In G. Pezzulo, M. V. Butz, O. Sigaud, G. Baldassarre, eds.: Anticipatory Behavior in Adaptive Learning Systems, from Sensorimotor to Higher-level Cognitive Capabilities, Springer, LNAI, 2009.

J. Schmidhuber. Simple Algorithmic Theory of Subjective Beauty, Novelty, Surprise, Interestingness, Attention, Curiosity, Creativity, Art, Science, Music, Jokes. Journal of SICE, 48(1):21-32, 2009.

J. Schmidhuber. Art & science as by-products of the search for novel patterns, or data compressible in unknown yet learnable ways. In M. Botta (ed.), Multiple ways to design research. Research cases that reshape the design discipline, Milano-Lugano, Swiss Design Network - Et al. Edizioni, 2009, pp. 98-112.

J. Schmidhuber. Artificial Scientists & Artists Based on the Formal Theory of Creativity. In Proceedings of the Third Conference on Artificial General Intelligence (AGI-2010), Lugano, Switzerland.

J. Schmidhuber. Formal Theory of Creativity, Fun, and Intrinsic Motivation (1990-2010). IEEE Transactions on Autonomous Mental Development, 2(3):230-247, 2010.

P. Redgrave. Comment on Nature 473, 450 (26 May 2011): Neuroscience: What makes us laugh.

J. Schmidhuber. A Formal Theory of Creativity to Model the Creation of Art. In J. McCormack (ed.), Computers and Creativity. MIT Press, 2012.